Содержание

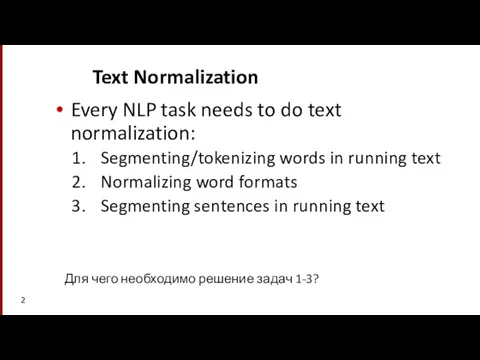

- 2. Text Normalization Every NLP task needs to do text normalization: Segmenting/tokenizing words in running text Normalizing

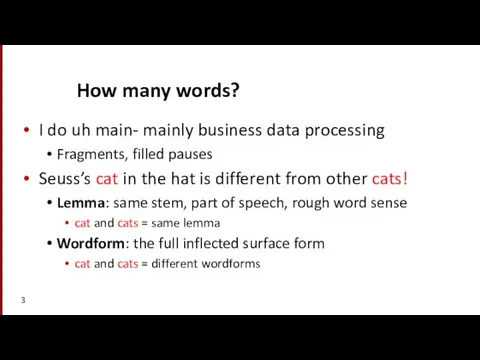

- 3. How many words? I do uh main- mainly business data processing Fragments, filled pauses Seuss’s cat

- 4. Рыбак рыбака видит издалека. Рыбак и рыбака — одна лемма, но разные словоформы. В чем отличие

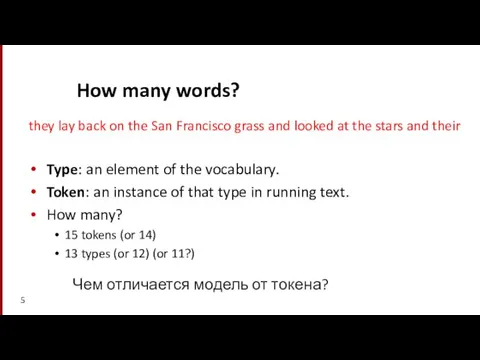

- 5. How many words? they lay back on the San Francisco grass and looked at the stars

- 6. Он не мог не ответить на это письмо. Сколько моделей и токенов?

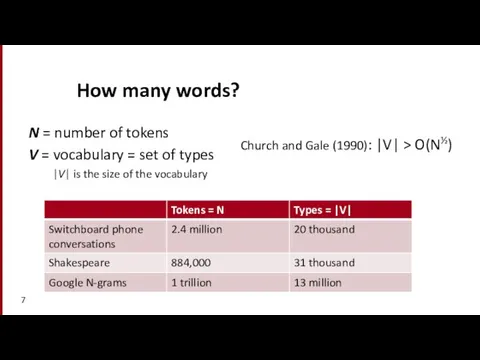

- 7. How many words? N = number of tokens V = vocabulary = set of types |V|

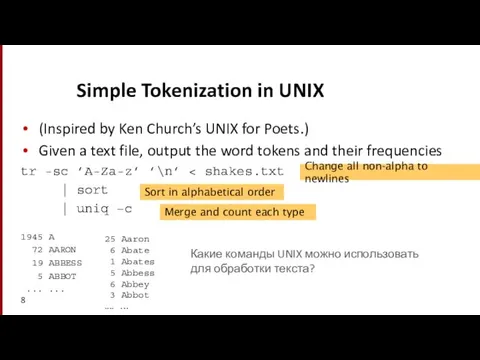

- 8. Simple Tokenization in UNIX (Inspired by Ken Church’s UNIX for Poets.) Given a text file, output

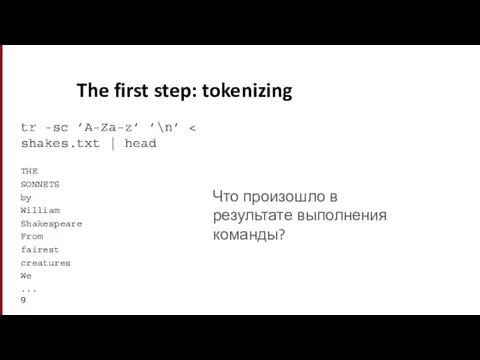

- 9. The first step: tokenizing tr -sc ’A-Za-z’ ’\n’ THE SONNETS by William Shakespeare From fairest creatures

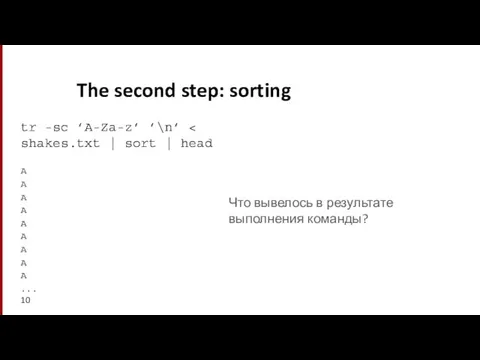

- 10. The second step: sorting tr -sc ’A-Za-z’ ’\n’ A A A A A A A A

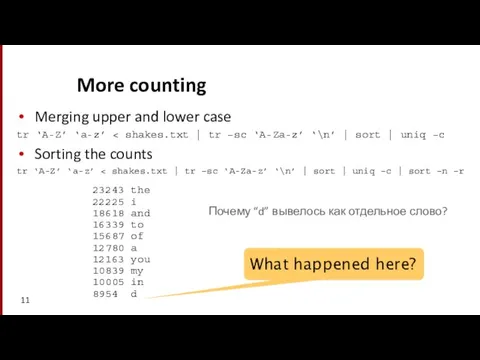

- 11. More counting Merging upper and lower case tr ‘A-Z’ ‘a-z’ Sorting the counts tr ‘A-Z’ ‘a-z’

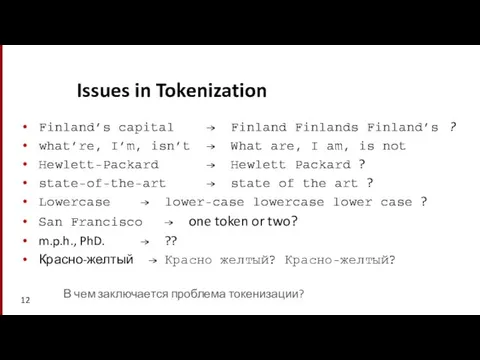

- 12. Issues in Tokenization Finland’s capital → Finland Finlands Finland’s ? what’re, I’m, isn’t → What are,

- 13. Tokenization: language issues French L'ensemble → one token or two? L ? L’ ? Le ?

- 14. Какие проблемы, связанные с особенностями языков, могут возникнуть?

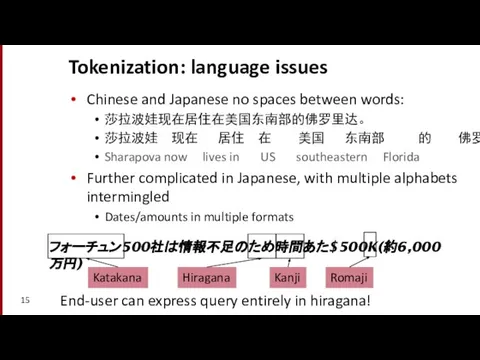

- 15. Tokenization: language issues Chinese and Japanese no spaces between words: 莎拉波娃现在居住在美国东南部的佛罗里达。 莎拉波娃 现在 居住 在 美国

- 16. Какие особенности японского языка еще больше осложняют обработку текста?

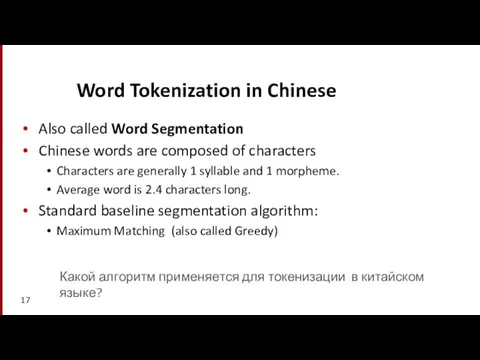

- 17. Word Tokenization in Chinese Also called Word Segmentation Chinese words are composed of characters Characters are

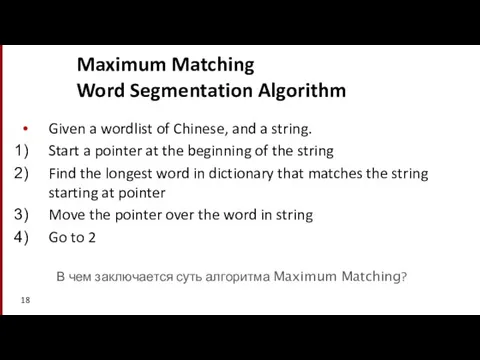

- 18. Maximum Matching Word Segmentation Algorithm Given a wordlist of Chinese, and a string. Start a pointer

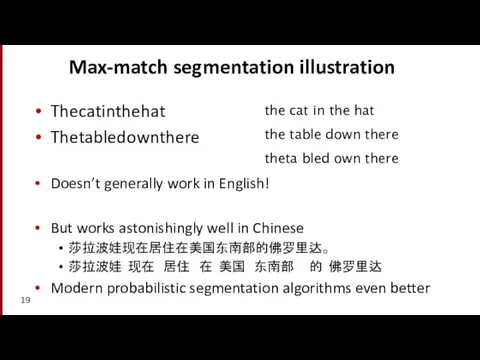

- 19. Max-match segmentation illustration Thecatinthehat Thetabledownthere Doesn’t generally work in English! But works astonishingly well in Chinese

- 21. Скачать презентацию

Лингвистическое путешествие

Лингвистическое путешествие Способи дієслова (6 клас)

Способи дієслова (6 клас) Корпусная лингвистика

Корпусная лингвистика מטלת סיום

מטלת סיום Урок – путешествия существительнойтнень ды прилагательнойтнень коряс

Урок – путешествия существительнойтнень ды прилагательнойтнень коряс Этнолингвистический конфликт в Республике Татарстан: истоки, развитие, нынешнее состояние и перспективы

Этнолингвистический конфликт в Республике Татарстан: истоки, развитие, нынешнее состояние и перспективы Suomen_kielen_26_a_oppitunti

Suomen_kielen_26_a_oppitunti Прикладні аспекти статистичної лінгвістики

Прикладні аспекти статистичної лінгвістики Урок мовленнєвого розвитку

Урок мовленнєвого розвитку Уровни эквивалентности в переводе

Уровни эквивалентности в переводе Автоматизация звука [Р] в слогах в словах

Автоматизация звука [Р] в слогах в словах Description des gens (описание внешности людей)

Description des gens (описание внешности людей) Сунтаар улууһун С.А.Зверев аатынан Тубэй Дьаархан оскуолатын 6 кылааһыгар ыытыллыбыт саха тылынт саха тылын уруогун барыла

Сунтаар улууһун С.А.Зверев аатынан Тубэй Дьаархан оскуолатын 6 кылааһыгар ыытыллыбыт саха тылынт саха тылын уруогун барыла Лингвистика текста и ее место в современной науке о языке

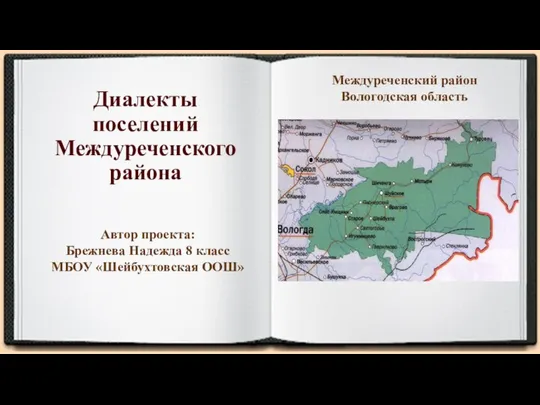

Лингвистика текста и ее место в современной науке о языке Диалекты поселений Междуреченского района

Диалекты поселений Междуреченского района Одежда. 衣服

Одежда. 衣服 Время в китайском языке

Время в китайском языке Терминологические словари и справочники. Школьные учебники и отражение в них научной терминологии

Терминологические словари и справочники. Школьные учебники и отражение в них научной терминологии Oui, je parle Français

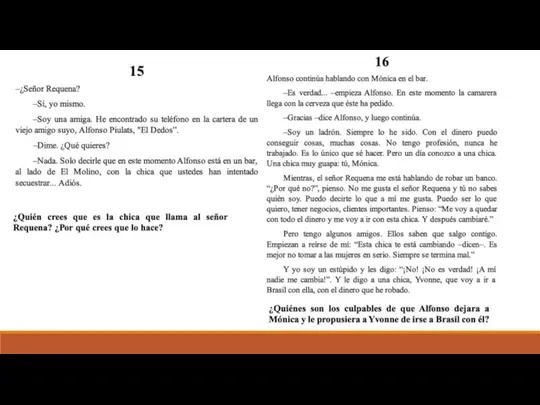

Oui, je parle Français La Chica del tren Capitulo (15-21)

La Chica del tren Capitulo (15-21) Мастер-класс Применение структуры ТОКИН МЭТ на уроках татарского языка

Мастер-класс Применение структуры ТОКИН МЭТ на уроках татарского языка Происхождение языка: обзор различных теорий

Происхождение языка: обзор различных теорий Предложения с глаголом 在 и употребление 在 (занятие 4)

Предложения с глаголом 在 и употребление 在 (занятие 4) Obsługa Klientów oddział I sprzedawca CKZ

Obsługa Klientów oddział I sprzedawca CKZ Языкознание как наука о языке

Языкознание как наука о языке Занятие по китайскому

Занятие по китайскому Tipos de significado lingüístico y clases de palabras

Tipos de significado lingüístico y clases de palabras Урок китайского языка

Урок китайского языка