Contents

- 2. Copyright © 2010, Elsevier Inc. All rights Reserved Roadmap Why we need ever-increasing performance. Why we’re

- 3. Changing times From 1986 – 2002, microprocessors were

- 4. An intelligent solution Instead of designing and building

- 5. Now it’s up to the programmers Adding more processors doesn’t help much if programmers aren’t aware

- 6. Why we need ever-increasing performance Computational power is increasing, but so are our computation problems and

- 7. Climate modeling

- 8. Protein folding

- 9. Drug discovery

- 10. Energy research

- 11. Data analysis

- 12. Why we’re building parallel systems Up to now, performance increases have been attributable to increasing density

- 13. A little physics lesson Smaller transistors = faster processors. Faster processors = increased power consumption. Increased

- 14. Solution Move away from single-core systems to multicore processors. “core” = central processing unit (CPU)

- 15. Why we need to write parallel programs Running multiple instances of a serial program often isn’t

- 16. Approaches to the serial problem Rewrite serial programs so that they’re parallel. Write translation programs that

- 17. More problems Some coding constructs can be recognized by an automatic program generator, and converted to

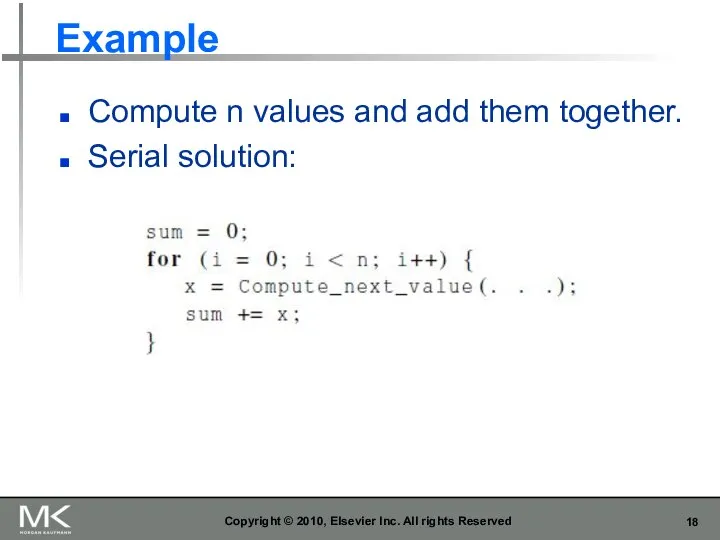

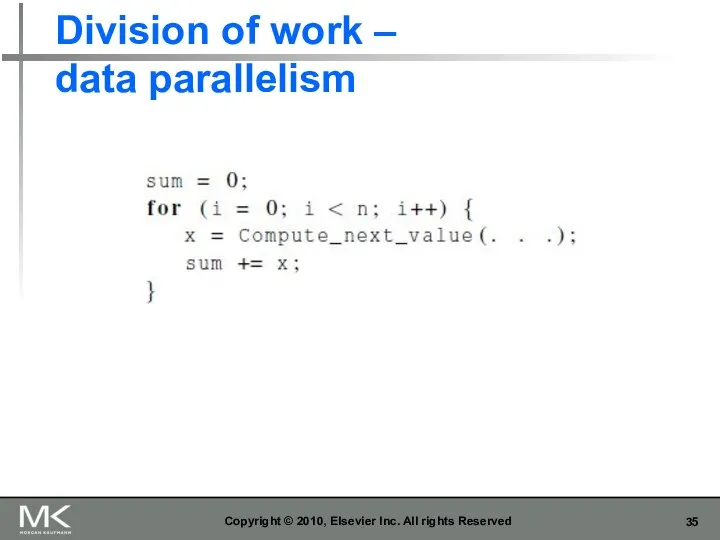

- 18. Example Compute n values and add them together. Serial solution:

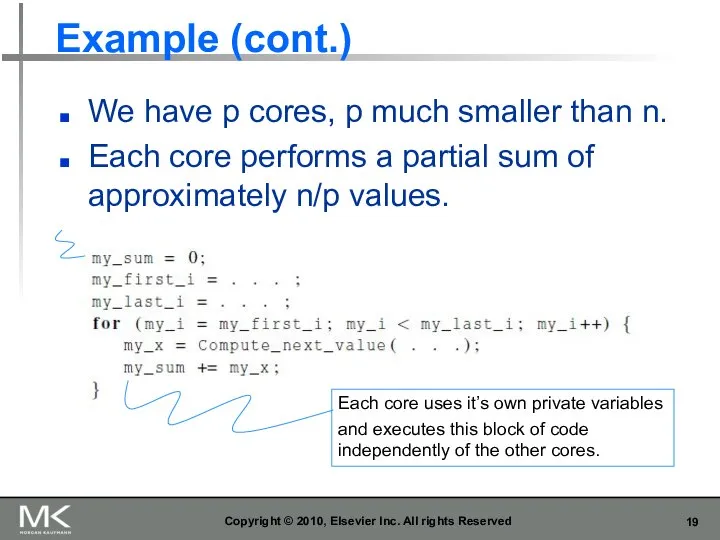
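The serial code for this slide is not reproduced in the text. A minimal C sketch of the idea follows, assuming a hypothetical value generator `compute_next_value` that simply returns `i % 10`; the real routine would compute something problem-specific.

```c
/* Hypothetical stand-in for the routine that produces each value. */
static int compute_next_value(int i) {
    return i % 10;
}

/* Serial solution: compute n values one at a time and accumulate them. */
int serial_sum(int n) {
    int sum = 0;
    for (int i = 0; i < n; i++) {
        int x = compute_next_value(i);
        sum += x;
    }
    return sum;
}
```

With this stand-in, `serial_sum(10)` adds 0 through 9.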

- 19. Example (cont.) We have p cores, p much smaller than n. Each core performs a partial

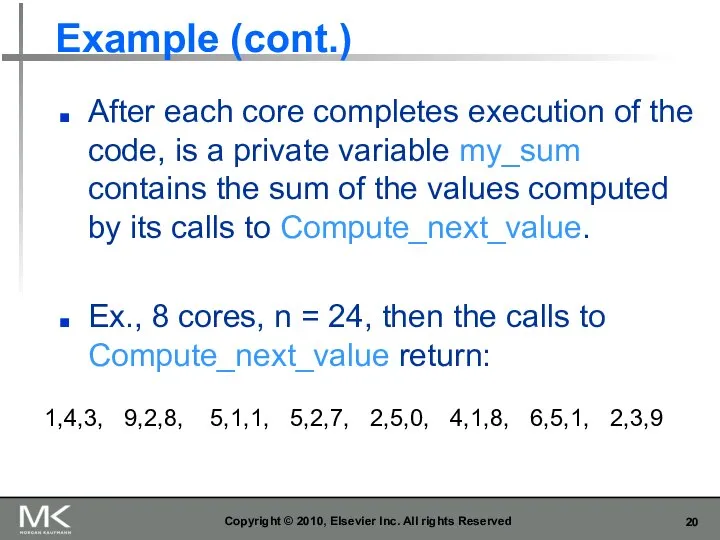
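A sketch of one core's share of the work, assuming p divides n so that each core gets a contiguous block of n/p indices. The cores are simulated here as ordinary function calls indexed by a hypothetical `my_rank` (0 to p-1); `my_first_i`, `my_last_i` and `compute_next_value` are illustrative names, while `my_sum` is the private variable the next slide refers to.

```c
/* Hypothetical stand-in for the routine that produces each value. */
static int compute_next_value(int i) {
    return i % 10;
}

/* Partial sum for one core, assuming p divides n: core my_rank handles
   the half-open block [my_first_i, my_last_i) of n/p indices. */
int partial_sum(int my_rank, int p, int n) {
    int block = n / p;
    int my_first_i = my_rank * block;
    int my_last_i = my_first_i + block;
    int my_sum = 0; /* private to this core */
    for (int my_i = my_first_i; my_i < my_last_i; my_i++) {
        int my_x = compute_next_value(my_i);
        my_sum += my_x;
    }
    return my_sum;
}
```

Adding `partial_sum(r, p, n)` over every rank r gives the same total as the serial loop, because the blocks cover each index exactly once.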

- 20. Example (cont.) After each core completes execution of the code, its private variable my_sum contains

- 21. Example (cont.) Once all the cores are done computing their private my_sum, they form a global

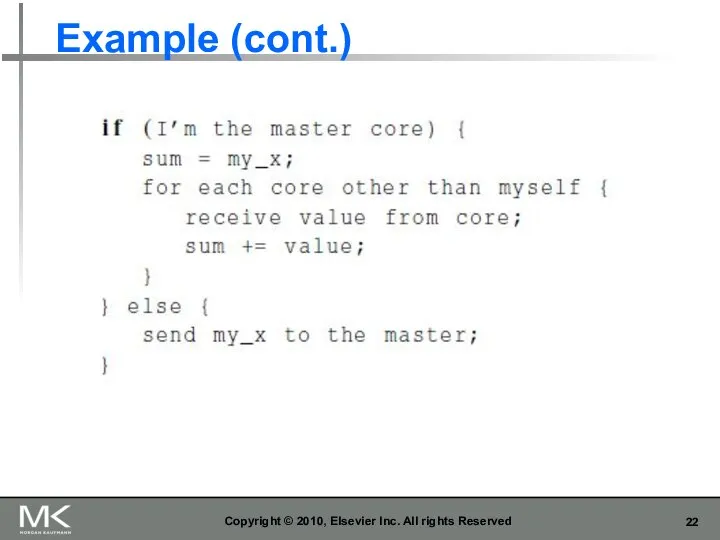
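A simulated version of the "master core" global sum, under the assumption that an array `my_sums` stands in for the messages: reading `my_sums[q]` plays the role of core 0 receiving core q's private result. The function name and the counters are hypothetical, added to make the master core's workload visible.

```c
/* Master-core global sum: core 0 receives every other core's my_sum
   and adds it to its own. The counters record core 0's workload. */
int master_global_sum(const int my_sums[], int p, int *receives, int *additions) {
    int sum = my_sums[0]; /* core 0 starts with its own my_sum */
    *receives = 0;
    *additions = 0;
    for (int q = 1; q < p; q++) {
        int value = my_sums[q]; /* "receive" core q's my_sum */
        (*receives)++;
        sum += value;
        (*additions)++;
    }
    return sum;
}
```

With eight cores, core 0 performs seven receives and seven additions while the other cores sit idle after sending.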

- 22. Example (cont.) Copyright © 2010, Elsevier Inc. All rights Reserved

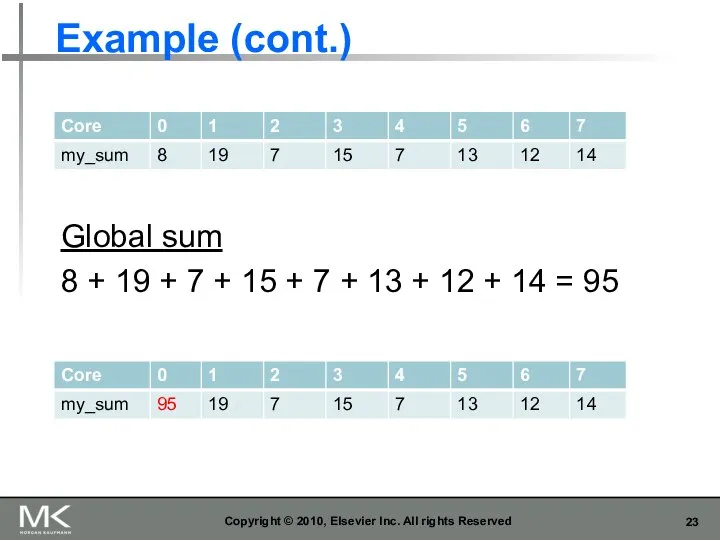

- 23. Example (cont.) Copyright © 2010, Elsevier Inc. All rights Reserved Global sum 8 + 19 +

- 24. Copyright © 2010, Elsevier Inc. All rights Reserved But wait! There’s a much better way to

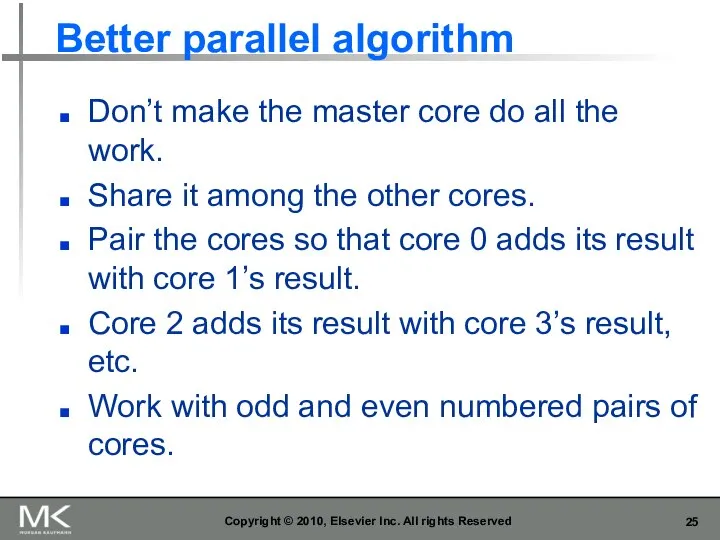

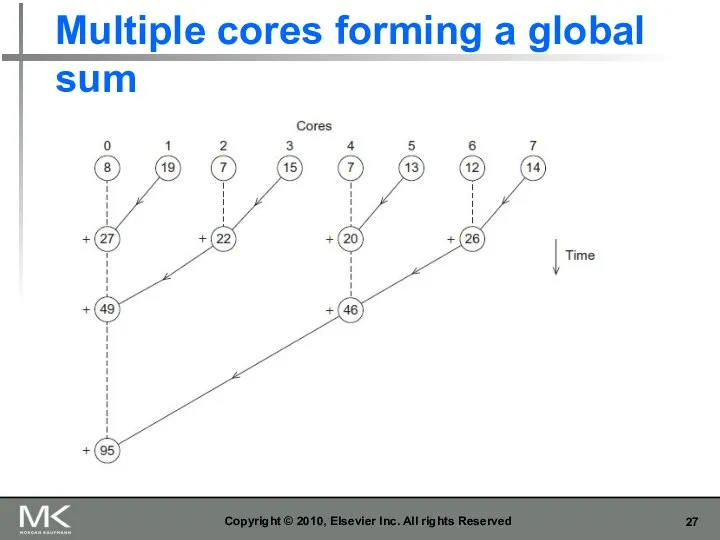

- 25. Better parallel algorithm Don’t make the master core do all the work. Share it among the

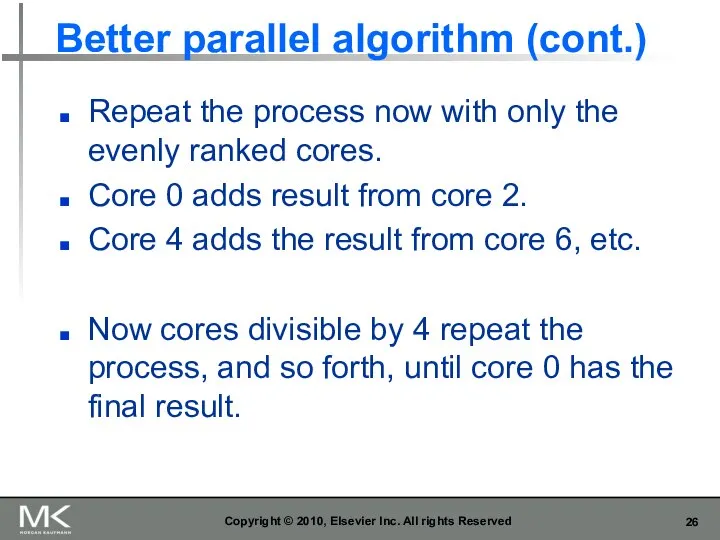
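The pairing scheme described on slides 25 and 26 can be simulated on an array, assuming `sums[q]` holds core q's result. In the round with a given `stride`, each core whose rank is a multiple of `2 * stride` adds in its partner's value at `rank + stride`; the first round pairs cores 0 and 1, 2 and 3, and so on, and the next round involves only the even-ranked cores. The function name is hypothetical, and the array is updated in place.

```c
/* Tree-structured global sum, simulated in place on sums[0..p-1].
   Works for any p >= 1, not only powers of two: a core whose partner
   rank + stride does not exist simply sits out that round. */
int tree_global_sum(int sums[], int p) {
    for (int stride = 1; stride < p; stride *= 2) {
        for (int rank = 0; rank + stride < p; rank += 2 * stride) {
            sums[rank] += sums[rank + stride]; /* "receive" from partner and add */
        }
    }
    return sums[0]; /* core 0 ends up holding the global sum */
}
```

Within a round the partners (odd multiples of `stride`) are never also receivers, so the order in which pairs are processed does not affect the result.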

- 26. Better parallel algorithm (cont.) Repeat the process now with only the evenly ranked cores. Core 0

- 27. Multiple cores forming a global sum

- 28. Analysis In the first example, the master core performs 7 receives and 7 additions. In the

- 29. Analysis (cont.) The difference is more dramatic with a larger number of cores. If we have

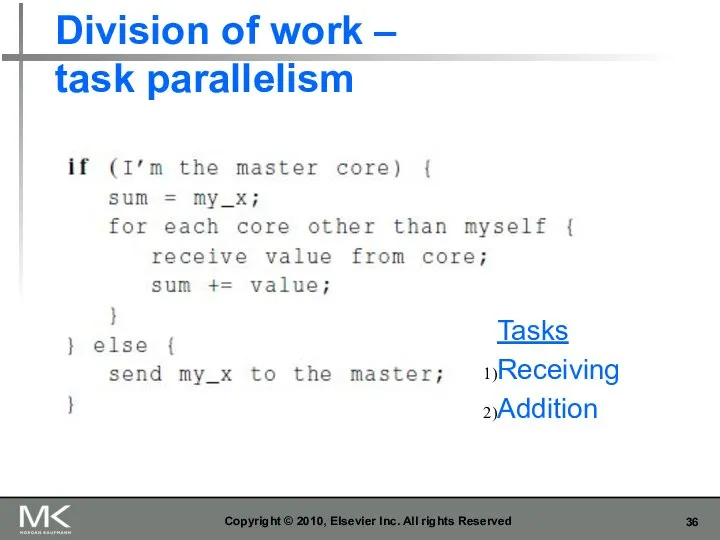
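The operation counts behind this analysis can be written as two small functions (names hypothetical). With the master-core scheme, core 0 receives once from every other core; with the tree scheme, core 0 receives once per round, and the number of rounds is ceil(log2 p) because the set of participating cores halves, rounding up, each round.

```c
/* Receives (and additions) done by the master core when it collects
   every other core's result directly. */
int master_receives(int p) {
    return p - 1;
}

/* Receives (and additions) done by core 0 in the tree-structured sum:
   one per round, i.e. ceil(log2 p) rounds. */
int tree_receives(int p) {
    int rounds = 0;
    for (int active = p; active > 1; active = (active + 1) / 2) {
        rounds++;
    }
    return rounds;
}
```

For p = 8 these give 7 and 3, matching slide 28; for p = 1000 they give 999 and 10.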

- 30. How do we write parallel programs? Task parallelism: partition the various tasks carried out in solving the problem

- 31. Professor P: 15 questions, 300 exams

- 32. Professor P’s grading assistants: TA#1, TA#2, TA#3

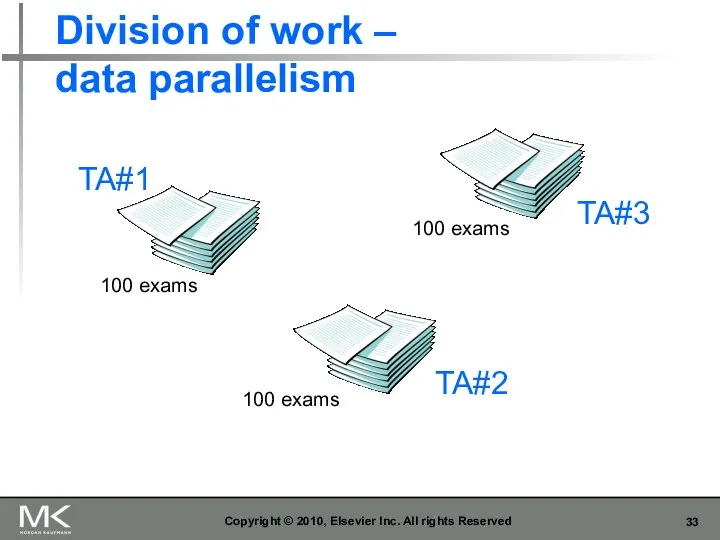

- 33. Division of work – data parallelism TA#1 TA#2

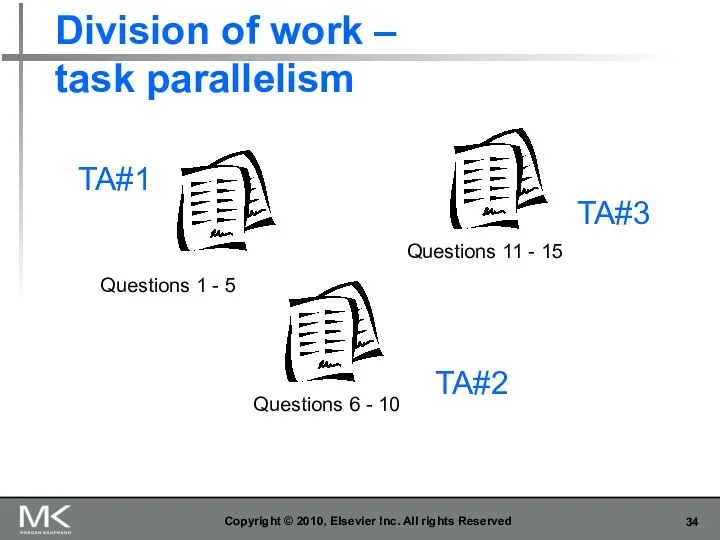

- 34. Division of work – task parallelism TA#1 TA#2
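The two ways of splitting Professor P's grading can be contrasted in a short sketch, assuming the work divides evenly among the three TAs (TA#1 to TA#3 are indexed 0 to 2 here). Under data parallelism each TA grades every question on its own block of exams; under task parallelism each TA grades its own block of questions on every exam. All names are hypothetical.

```c
#define NUM_EXAMS 300
#define NUM_QUESTIONS 15
#define NUM_TAS 3

/* Inclusive, 1-based range of exam or question numbers. */
typedef struct {
    int first;
    int last;
} Range;

/* Data parallelism: TA ta grades all questions on a block of exams. */
Range data_parallel_exams(int ta) {
    int per_ta = NUM_EXAMS / NUM_TAS;
    Range r = { ta * per_ta + 1, (ta + 1) * per_ta };
    return r;
}

/* Task parallelism: TA ta grades a block of questions on all exams. */
Range task_parallel_questions(int ta) {
    int per_ta = NUM_QUESTIONS / NUM_TAS;
    Range r = { ta * per_ta + 1, (ta + 1) * per_ta };
    return r;
}

/* Individual question gradings one TA performs under each scheme. */
int gradings_data(int ta) {
    Range r = data_parallel_exams(ta);
    return (r.last - r.first + 1) * NUM_QUESTIONS;
}

int gradings_task(int ta) {
    Range r = task_parallel_questions(ta);
    return (r.last - r.first + 1) * NUM_EXAMS;
}
```

Either way each TA performs 1500 question gradings; the schemes differ in what is partitioned (the data, or the kinds of work).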

- 35. Division of work – data parallelism

- 36. Division of work – task parallelism Tasks Receiving

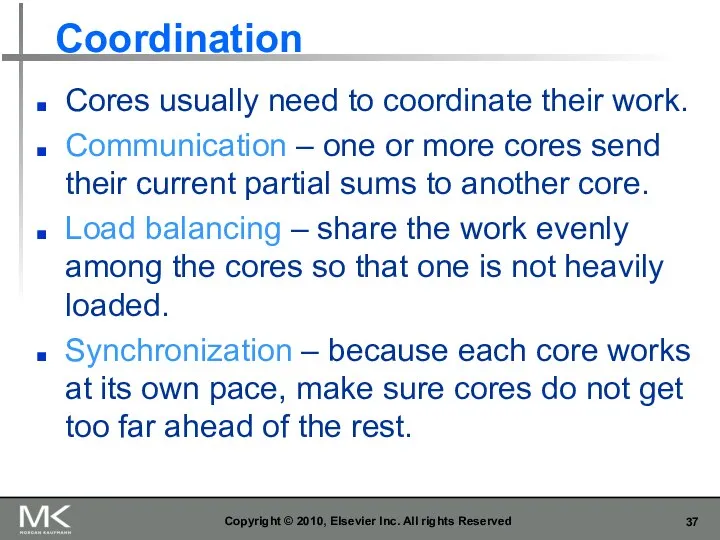

- 37. Coordination Cores usually need to coordinate their work. Communication – one or more cores send their

- 38. What we’ll be doing Learning to write programs that are explicitly parallel. Using the C language.

- 39. Types of parallel systems Shared-memory: the cores can share access to the computer’s memory. Coordinate the

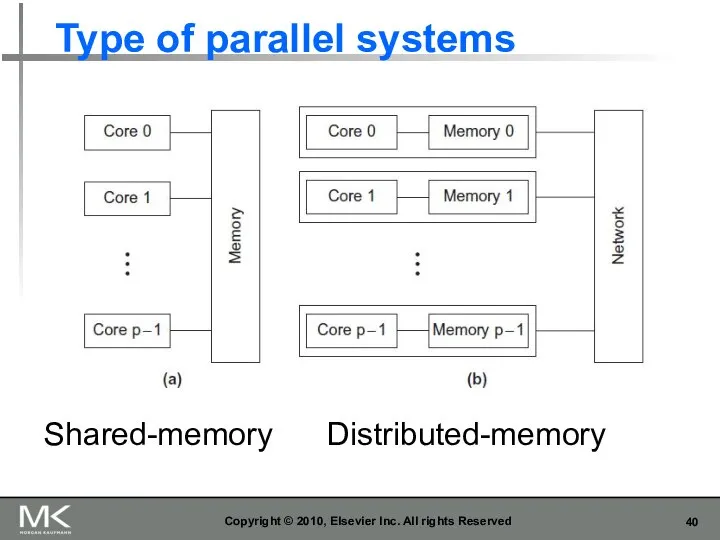
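On a shared-memory system, one common way to write the global sum in C is with POSIX threads. The sketch below is an assumption about how that could look, not code from the slides: each thread computes a private partial sum and then adds it to a shared variable, using a mutex so that only one thread updates the shared location at a time. `compute_next_value`, `Args` and `threaded_sum` are hypothetical names, and the block bounds `rank * n / p` also handle n not divisible by p.

```c
#include <pthread.h>
#include <stdlib.h>

/* Hypothetical stand-in for the routine that produces each value. */
static int compute_next_value(int i) { return i % 10; }

/* Shared state visible to every thread (so threaded_sum is not reentrant). */
static long global_sum;
static pthread_mutex_t sum_mutex = PTHREAD_MUTEX_INITIALIZER;

typedef struct {
    int my_rank;
    int p;
    int n;
} Args;

static void *thread_sum(void *arg) {
    const Args *a = arg;
    int my_first_i = (int)((long)a->my_rank * a->n / a->p);
    int my_last_i = (int)((long)(a->my_rank + 1) * a->n / a->p);
    long my_sum = 0; /* private to this thread */
    for (int my_i = my_first_i; my_i < my_last_i; my_i++)
        my_sum += compute_next_value(my_i);
    pthread_mutex_lock(&sum_mutex); /* one thread at a time */
    global_sum += my_sum;
    pthread_mutex_unlock(&sum_mutex);
    return NULL;
}

/* Sums n values with p threads; returns -1 if setup fails. */
long threaded_sum(int n, int p) {
    pthread_t *threads = malloc((size_t)p * sizeof *threads);
    Args *args = malloc((size_t)p * sizeof *args);
    if (threads == NULL || args == NULL) {
        free(threads);
        free(args);
        return -1;
    }
    global_sum = 0;
    int created = 0;
    for (int r = 0; r < p; r++) {
        args[r] = (Args){ r, p, n };
        if (pthread_create(&threads[r], NULL, thread_sum, &args[r]) != 0)
            break;
        created++;
    }
    for (int r = 0; r < created; r++)
        pthread_join(threads[r], NULL); /* wait for every started thread */
    free(threads);
    free(args);
    return created == p ? global_sum : -1;
}
```

The mutex is what coordinates the threads here: without it, two threads could read the same old value of `global_sum` and one update would be lost.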

- 40. Types of parallel systems Shared-memory Distributed-memory

- 41. Terminology Concurrent computing – a program is one in which multiple tasks can be in progress

- 42. Concluding Remarks (1) The laws of physics have brought us to the doorstep of multicore technology.
