Contents

- 2. Recap: Decision Trees (in class) for classification, using categorical predictors and classification error as our metric

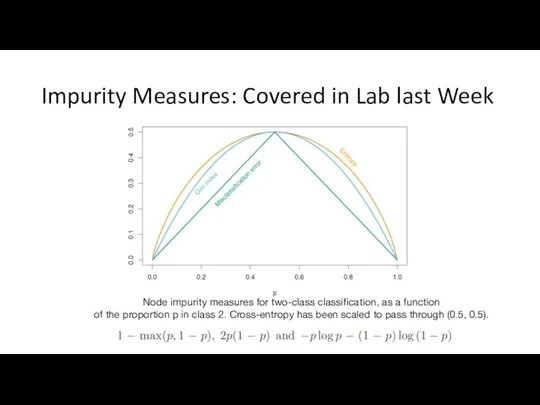

- 3. Impurity Measures (covered in lab last week): Node impurity measures for two-class classification, as a function

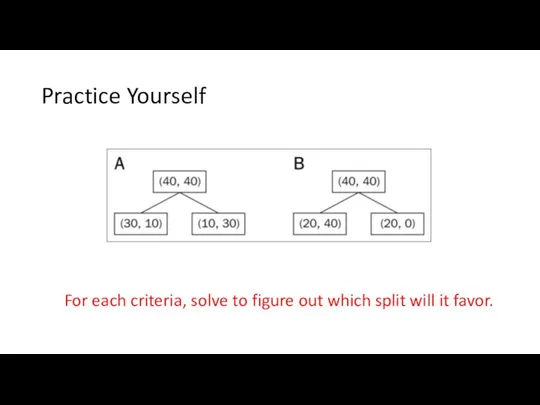
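As a sketch for the practice exercise, here are the three two-class impurity measures, assuming the usual definitions with p the proportion of class 1 in a node (entropy in bits), plus the size-weighted impurity of a split. The function names and the example splits are illustrative, not taken from the slides.

```python
import math

def gini(p: float) -> float:
    """Gini index of a two-class node; p is the proportion of class 1."""
    return 2.0 * p * (1.0 - p)

def entropy(p: float) -> float:
    """Entropy in bits; terms with zero probability contribute 0."""
    return -sum(q * math.log2(q) for q in (p, 1.0 - p) if q > 0)

def misclassification_error(p: float) -> float:
    """Error made by predicting the majority class of the node."""
    return 1.0 - max(p, 1.0 - p)

def split_impurity(children, measure) -> float:
    """Impurity of a split, weighting each child by its share of the points.

    children: list of (n_class1, n_total) pairs, one per child node.
    """
    n = sum(total for _, total in children)
    return sum(total / n * measure(pos / total) for pos, total in children)

# 400 points per class: split A -> (300, 100) / (100, 300), split B -> (200, 400) / (200, 0)
split_a = [(300, 400), (100, 400)]
split_b = [(200, 600), (200, 200)]
for name, m in [("error", misclassification_error), ("gini", gini), ("entropy", entropy)]:
    print(name, round(split_impurity(split_a, m), 3), round(split_impurity(split_b, m), 3))
```

In this example both splits have a misclassification error of 0.25, so that criterion cannot choose between them, while Gini index and entropy both give split B (which has a pure child) the lower impurity.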

- 4. Practice Yourself: For each criterion, work out which split it will favor.

- 5. Today’s Objectives: Overfitting in Decision Trees (Tree Pruning); Ensemble Learning (combine the power of multiple

- 6. Overfitting in Decision Trees

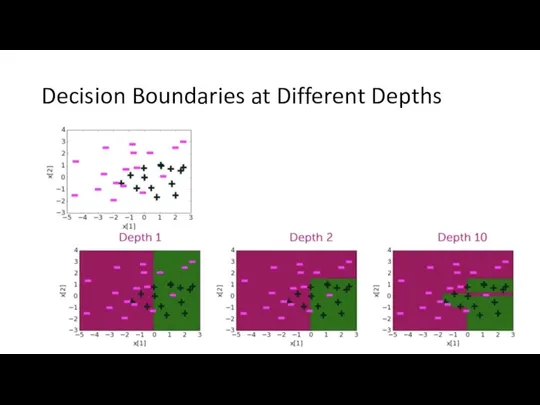

- 7. Decision Boundaries at Different Depths

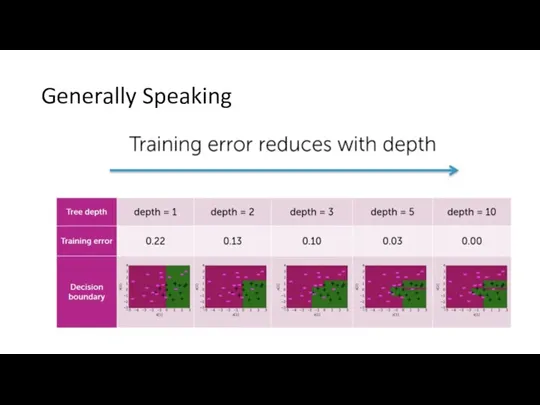

- 8. Generally Speaking

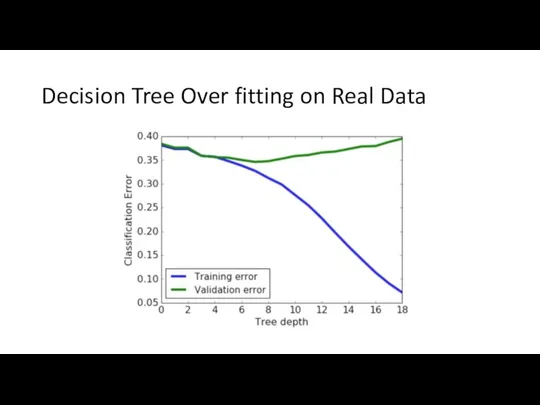

- 9. Decision Tree Overfitting on Real Data

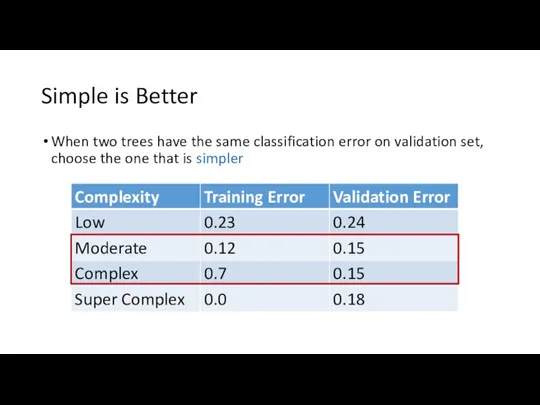

- 10. Simple is Better: When two trees have the same classification error on the validation set, choose the simpler one.

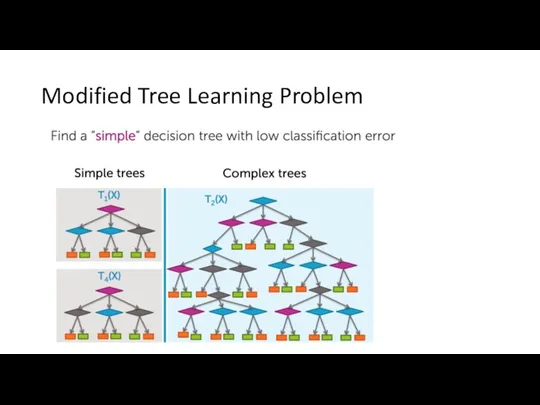

- 11. Modified Tree Learning Problem

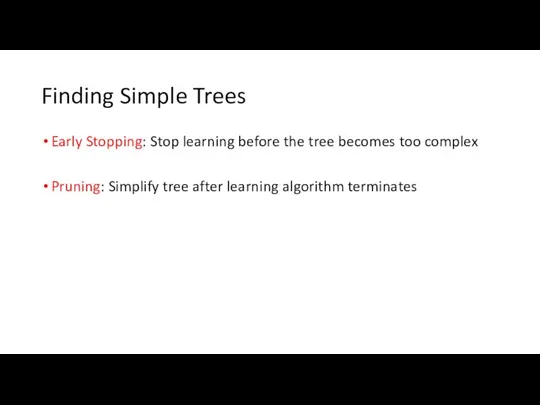

- 12. Finding Simple Trees. Early stopping: stop learning before the tree becomes too complex. Pruning: simplify the tree

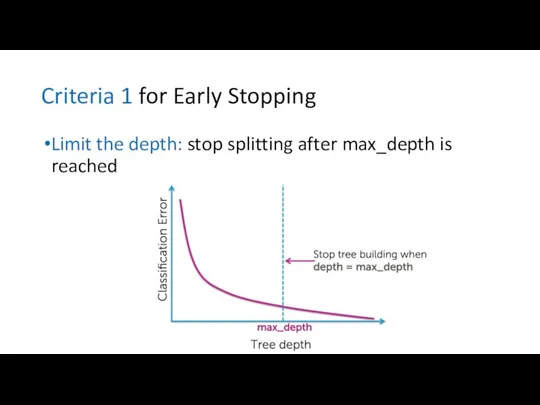

- 13. Criterion 1 for Early Stopping: Limit the depth, i.e. stop splitting once max_depth is reached

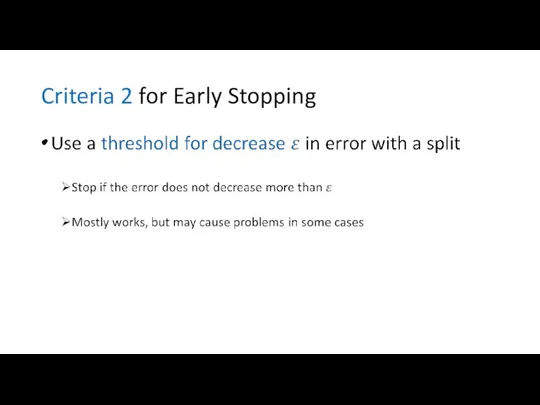
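A minimal sketch of depth-limited tree learning, assuming (as in the recap) categorical predictors, classification error as the split criterion, and training rows stored as dicts of feature values. Only criterion 1 (max_depth) is implemented, alongside the natural stops at a pure node or when no features remain; all function names and the dict-based tree format are hypothetical.

```python
from collections import Counter

def majority(labels):
    """Most common label (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]

def node_mistakes(labels):
    """Mistakes made by predicting the majority label at this node."""
    return len(labels) - Counter(labels).most_common(1)[0][1]

def split_mistakes(rows, labels, feature):
    """Total mistakes after splitting on one categorical feature."""
    groups = {}
    for row, y in zip(rows, labels):
        groups.setdefault(row[feature], []).append(y)
    return sum(node_mistakes(g) for g in groups.values())

def build_tree(rows, labels, features, max_depth, depth=0):
    # Stop at a pure node, when no features remain, or at max_depth (criterion 1).
    if node_mistakes(labels) == 0 or not features or depth >= max_depth:
        return {"leaf": majority(labels)}
    best = min(features, key=lambda f: split_mistakes(rows, labels, f))
    children = {}
    for value in {row[best] for row in rows}:
        sub = [(r, y) for r, y in zip(rows, labels) if r[best] == value]
        children[value] = build_tree([r for r, _ in sub], [y for _, y in sub],
                                     [f for f in features if f != best],
                                     max_depth, depth + 1)
    return {"feature": best, "children": children, "default": majority(labels)}

def predict(tree, row):
    """Follow the branches; unseen category values fall back to the node's majority label."""
    while "leaf" not in tree:
        tree = tree["children"].get(row[tree["feature"]], {"leaf": tree["default"]})
    return tree["leaf"]
```

Lowering max_depth yields fewer, coarser regions (max_depth=0 is a single leaf), which is exactly the complexity knob whose validation error the previous slides plot against depth.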

- 14. Criterion 2 for Early Stopping

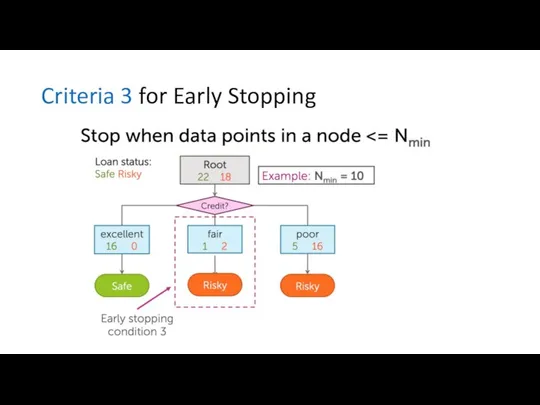

- 15. Criterion 3 for Early Stopping

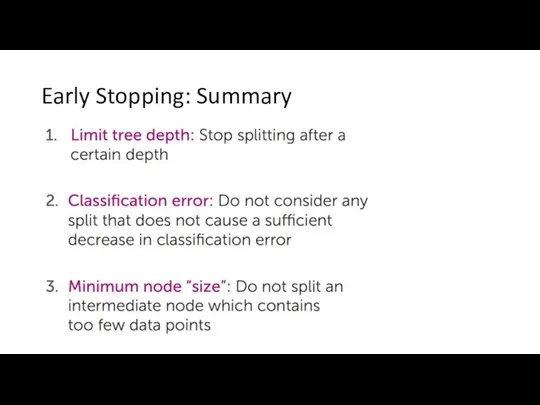

- 16. Early Stopping: Summary

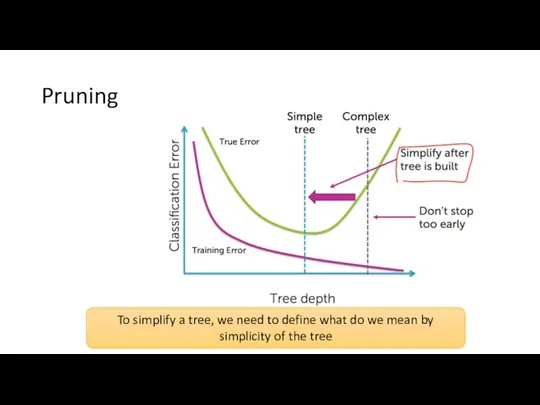

- 17. Pruning: To simplify a tree, we need to define what we mean by the simplicity of

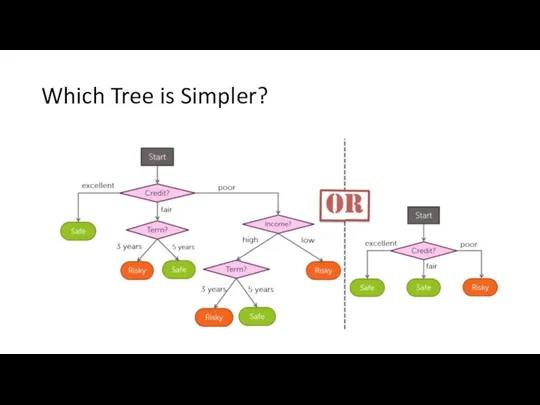

- 18. Which Tree is Simpler?

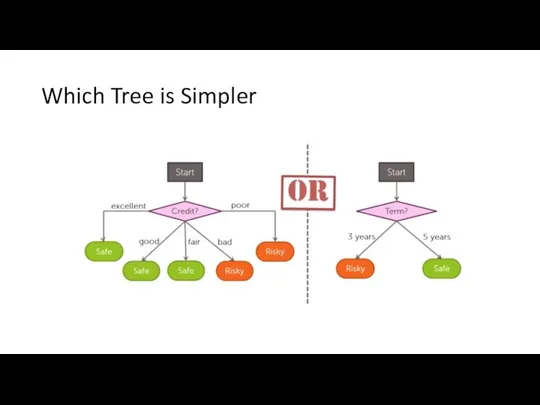

- 19. Which Tree is Simpler?

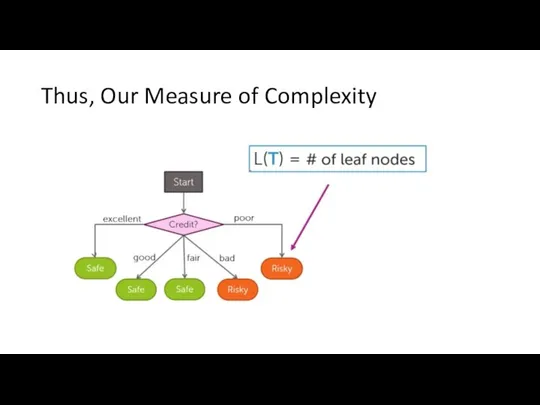

- 20. Thus, Our Measure of Complexity

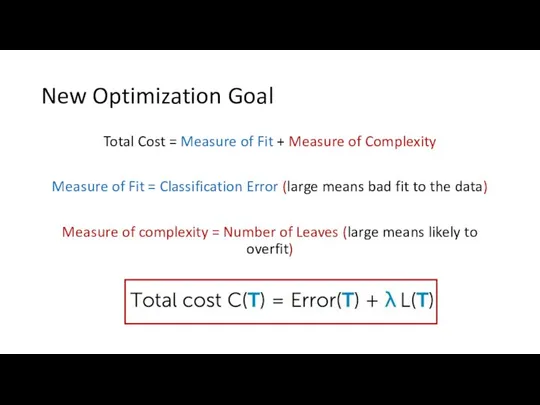

- 21. New Optimization Goal: Total Cost = Measure of Fit + Measure of Complexity. Measure of Fit

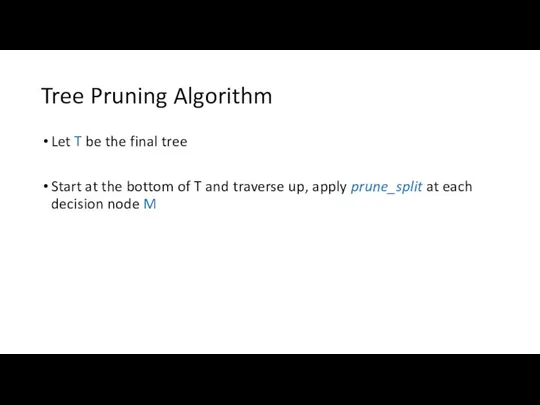
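Written as a formula, and assuming (as is common; the exact notation is in the slide images) that the measure of fit is the classification error of tree T, the measure of complexity is its number of leaves L(T), and a tuning parameter λ ≥ 0 sets the trade-off:

$$
C(T) = \underbrace{\mathrm{Error}(T)}_{\text{measure of fit}} + \lambda \, \underbrace{L(T)}_{\text{measure of complexity}}
$$

With λ = 0 this reduces to ordinary tree learning (fit only); as λ grows, trees with fewer leaves are favored, and a large enough λ makes the single-leaf tree optimal.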

- 22. Tree Pruning Algorithm: Let T be the final tree. Start at the bottom of T and

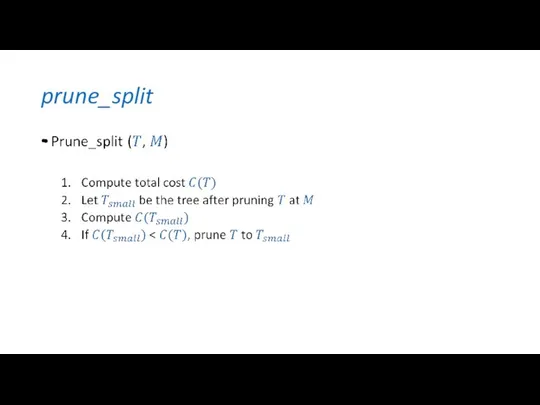

- 23. prune_split
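A hypothetical reconstruction of bottom-up pruning with a `prune_split` step; the slide's own version may differ in detail. It assumes the total cost C(T) = Error(T) + λ·L(T), with Error(T) the fraction of training points misclassified and L(T) the number of leaves, and a dict-based tree where a node is either `{"leaf": label}` or `{"feature": name, "children": {value: subtree}, "default": majority_label}`.

```python
def predict(tree, row):
    """Follow the branches; unseen values fall back to the node's majority label."""
    while "leaf" not in tree:
        tree = tree["children"].get(row[tree["feature"]], {"leaf": tree["default"]})
    return tree["leaf"]

def count_leaves(tree):
    if "leaf" in tree:
        return 1
    return sum(count_leaves(child) for child in tree["children"].values())

def mistakes(tree, rows, labels):
    return sum(predict(tree, r) != y for r, y in zip(rows, labels))

def prune_split(tree, rows, labels, n_total, lam):
    """Replace this decision node by a leaf if that does not increase C(T)."""
    if "leaf" in tree:
        return tree
    leaf = {"leaf": tree["default"]}
    # Error(T) and L(T) are sums over leaves, so only this subtree's share of C(T) changes.
    cost_keep = mistakes(tree, rows, labels) / n_total + lam * count_leaves(tree)
    cost_prune = mistakes(leaf, rows, labels) / n_total + lam * 1
    return leaf if cost_prune <= cost_keep else tree  # ties go to the simpler tree

def prune(tree, rows, labels, lam, n_total=None):
    """Start at the bottom: prune every child subtree first, then consider this node."""
    n_total = len(rows) if n_total is None else n_total
    if "leaf" in tree:
        return tree
    f = tree["feature"]
    children = {}
    for value, child in tree["children"].items():
        sub = [(r, y) for r, y in zip(rows, labels) if r[f] == value]
        children[value] = prune(child, [r for r, _ in sub], [y for _, y in sub], lam, n_total)
    return prune_split({**tree, "children": children}, rows, labels, n_total, lam)
```

Here `rows`/`labels` are the training points that reach each node, and λ is passed as `lam`. The function returns a new tree rather than modifying the input, so the same learned tree can be pruned at several values of λ and compared on a validation set.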

- 24. Ensemble Learning

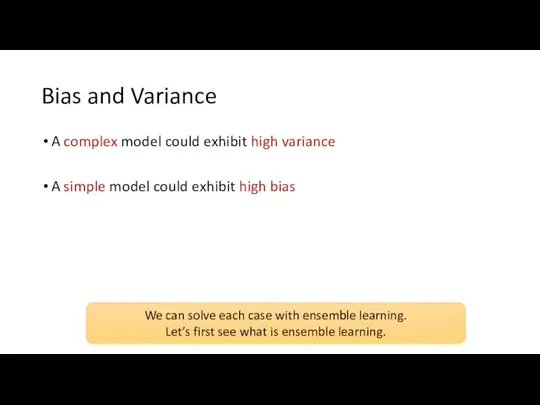

- 25. Bias and Variance: A complex model could exhibit high variance; a simple model could exhibit high bias.

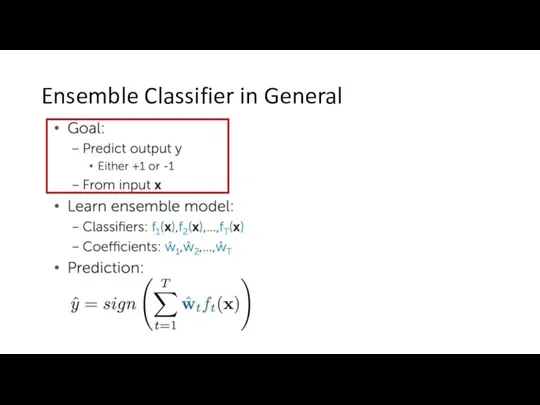

- 26. Ensemble Classifier in General

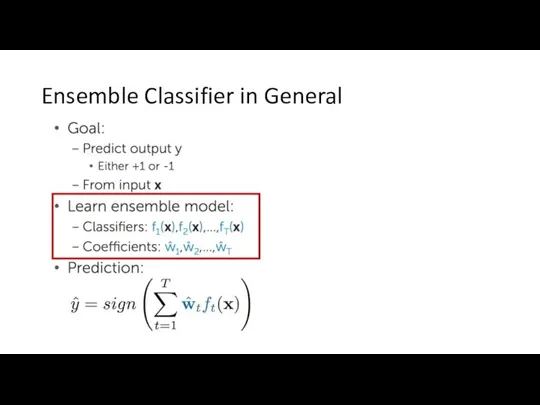

- 27. Ensemble Classifier in General

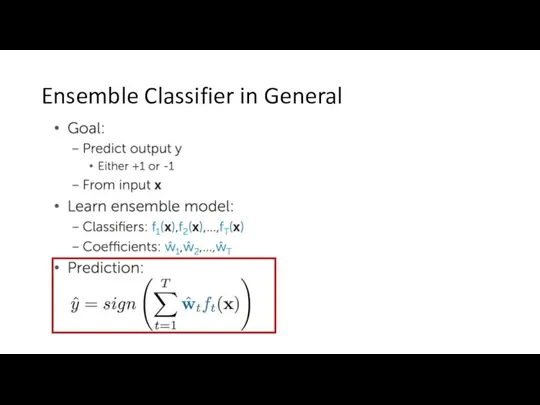

- 28. Ensemble Classifier in General

- 29. Important: A necessary and sufficient condition for an ensemble of classifiers to be more accurate than
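To see why the quality and independence of the base classifiers matter, here is a small calculation under simplifying assumptions that are not from the slides: an odd number n of classifiers, each with the same error rate ε, making independent errors, combined by majority vote. The vote is wrong only when more than half of the members are wrong, which is a binomial tail. The function name is hypothetical.

```python
from math import comb

def ensemble_error(n_classifiers: int, base_error: float) -> float:
    """P(majority vote is wrong) for n independent classifiers with error base_error (n odd)."""
    k_min = n_classifiers // 2 + 1  # fewest wrong votes that make the majority wrong
    return sum(comb(n_classifiers, k)
               * base_error ** k * (1 - base_error) ** (n_classifiers - k)
               for k in range(k_min, n_classifiers + 1))

for eps in (0.25, 0.5, 0.6):
    print(eps, round(ensemble_error(11, eps), 4))
```

With 11 classifiers of error 0.25 the ensemble error is roughly 0.034, at ε = 0.5 the vote is no better than a coin flip, and for ε > 0.5 it is worse than a single member. Real classifiers trained on the same data make correlated errors, so actual gains are smaller than this idealized calculation suggests.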

- 30. Bagging: Reducing Variance using An Ensemble of Classifiers from Bootstrap Samples

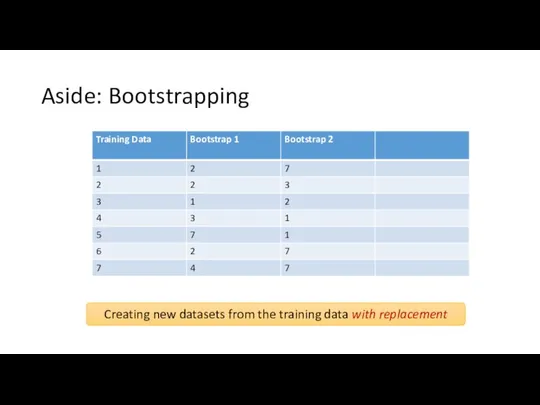

- 31. Aside: Bootstrapping: creating new datasets by sampling from the training data with replacement

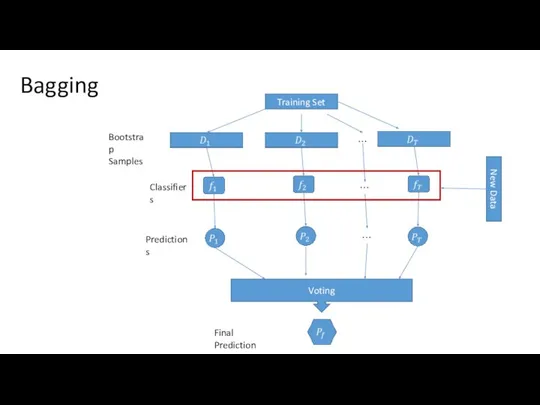
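A minimal sketch using Python's standard `random` module (the helper name `bootstrap_sample` is hypothetical): each bootstrap dataset has the same size as the training set and is drawn with replacement, so some points appear several times and others not at all.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly at random from data, with replacement."""
    return rng.choices(data, k=len(data))

rng = random.Random(0)
data = list(range(10_000))
sample = bootstrap_sample(data, rng)
unique_fraction = len(set(sample)) / len(data)
print(f"fraction of distinct original points: {unique_fraction:.3f}")
```

For large n the expected fraction of distinct original points is 1 - (1 - 1/n)^n, which approaches 1 - 1/e ≈ 0.632; the points left out are "out of bag" for that sample.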

- 32. Bagging (diagram: Training Set → Bootstrap Samples → Classifiers → Predictions on New Data → Voting → Final Prediction)
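The bagging pipeline can be sketched in a few lines of pure Python. Assumptions for brevity: one numeric input, binary labels 0/1, and a one-threshold decision stump as the base learner; all names are hypothetical. Each model is trained on its own bootstrap sample, and a new point gets the majority vote of all models.

```python
import random
from collections import Counter

def train_stump(xs, ys):
    """Decision stump on 1-D inputs: predict `left` if x <= t, else `right`."""
    best = None
    for t in sorted(set(xs)):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= t else right) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, left, right)
    _, t, left, right = best
    return lambda x: left if x <= t else right

def bagging(xs, ys, n_models, rng):
    """Train n_models stumps on bootstrap samples; predict by majority vote."""
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = rng.choices(range(n), k=n)  # bootstrap sample: n indices with replacement
        models.append(train_stump([xs[i] for i in idx], [ys[i] for i in idx]))

    def predict(x):
        votes = Counter(model(x) for model in models)
        return votes.most_common(1)[0][0]  # majority vote

    return predict

clf = bagging(list(range(20)), [0] * 10 + [1] * 10, n_models=25, rng=random.Random(1))
print(clf(3), clf(16))
```

Stumps keep the sketch short, but bagging pays off most with high-variance base learners such as deep, unpruned trees, which is how random forests use it.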

- 33. Why Does Bagging Work?

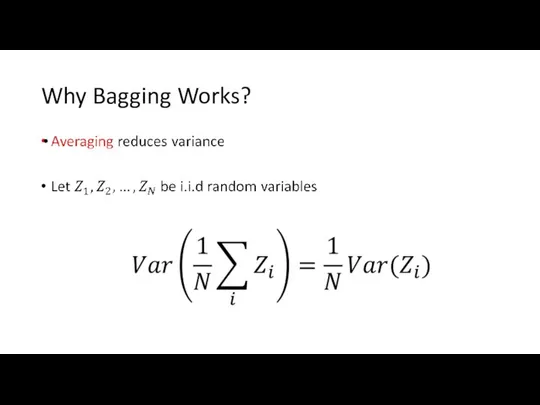
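One standard way to see the effect, assuming the B bagged models produce (averaged, regression-style) predictions f_1(x), …, f_B(x) that each have variance σ² and pairwise correlation ρ:

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} f_b(x)\right) = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

Averaging identically distributed models leaves the expected prediction (and hence the bias) unchanged but shrinks the variance; with independent models (ρ = 0) it falls to σ²/B. Bootstrap samples overlap, so in practice ρ > 0 and adding more models cannot push the variance below ρσ².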

- 34. Bagging Summary: Bagging was first proposed by Leo Breiman in a technical report in 1994. He

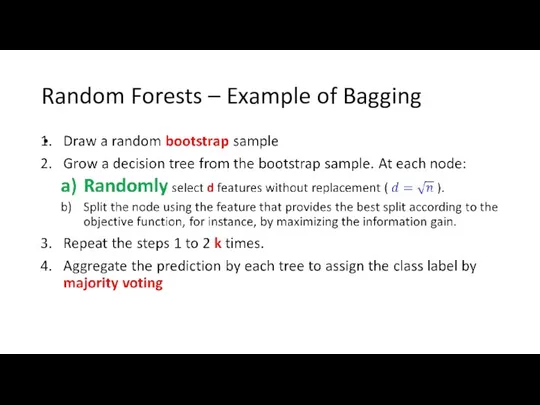

- 35. Random Forests – Example of Bagging

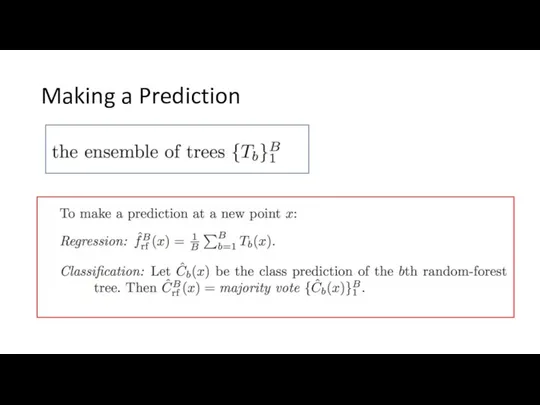

- 36. Making a Prediction

- 37. Boosting: Converting Weak Learners to Strong Learners through Ensemble Learning

- 38. Boosting and Bagging: Boosting works in a similar way to bagging, except that models are built sequentially: each

- 39. Boosting: (1) Train a Classifier

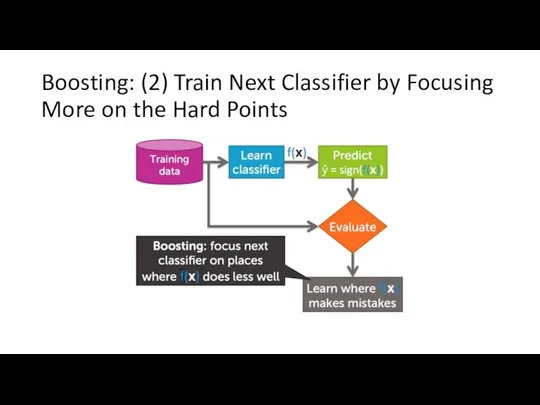

- 40. Boosting: (2) Train Next Classifier by Focusing More on the Hard Points

- 41. What does it mean to focus more?

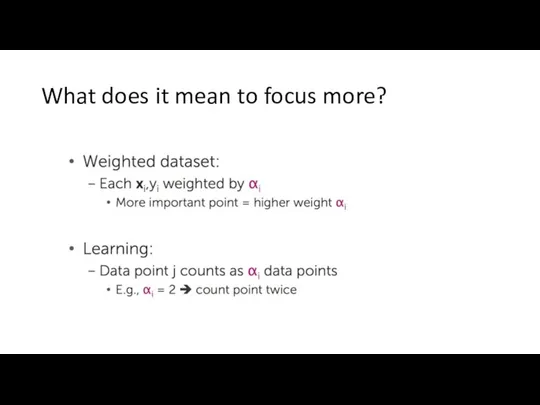

- 42. Example (Unweighted): Learning a Simple Decision Stump

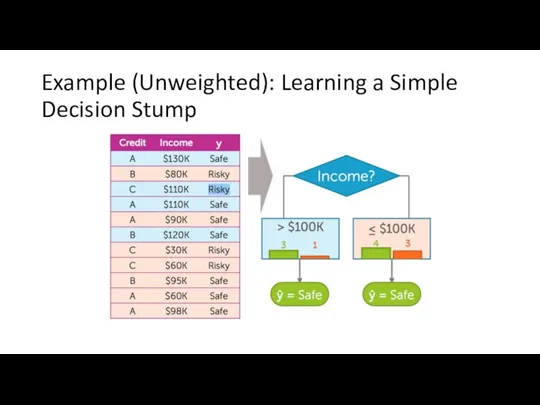

- 43. Example (Weighted): Learning a Decision Stump on Weighted Data

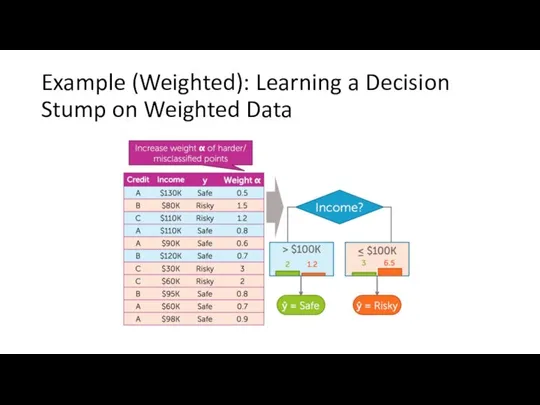

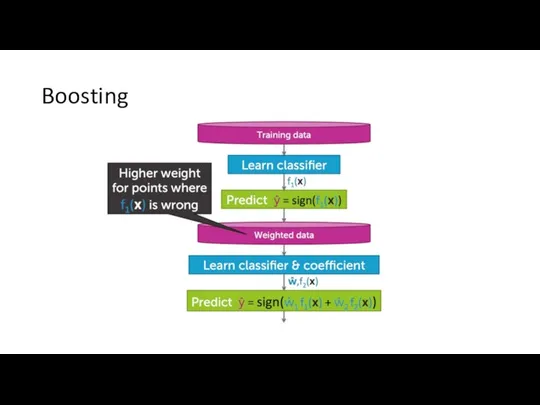
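A minimal sketch of the weighted version, assuming a single categorical feature: each category predicts the label with the largest total weight (instead of the largest count), and the stump is scored by its weighted error. The function name and data are hypothetical; with several features, one would fit a stump per feature and keep the one with the lowest weighted error.

```python
from collections import defaultdict

def weighted_stump(xs, ys, weights):
    """Categorical stump: each category predicts its heaviest label.

    Returns (rule, weighted_error), where rule maps category -> predicted label.
    """
    totals = defaultdict(lambda: defaultdict(float))  # category -> label -> summed weight
    for x, y, w in zip(xs, ys, weights):
        totals[x][y] += w
    rule = {cat: max(label_w, key=label_w.get) for cat, label_w in totals.items()}
    wrong = sum(w for x, y, w in zip(xs, ys, weights) if rule[x] != y)
    return rule, wrong / sum(weights)

xs = ["fair", "fair", "fair"]
ys = ["safe", "risky", "risky"]
print(weighted_stump(xs, ys, [1.0, 1.0, 1.0]))  # equal weights: majority count wins
print(weighted_stump(xs, ys, [3.0, 1.0, 1.0]))  # heavier "safe" point flips the leaf
```

With equal weights this reduces to the unweighted stump; giving the single safe loan weight 3 makes "safe" the heavier label, so the leaf prediction changes even though the counts do not.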

- 44. Boosting

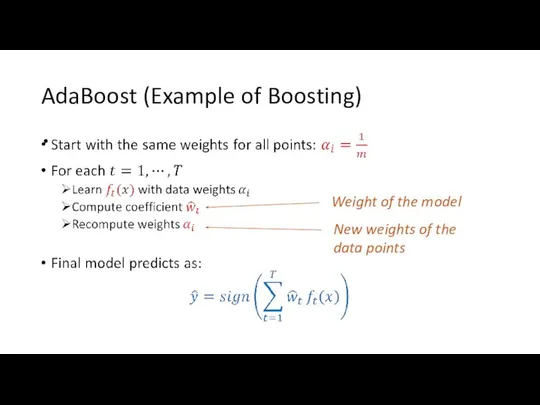

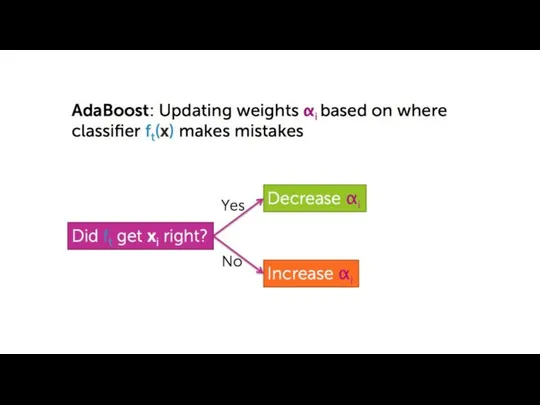

- 45. AdaBoost (Example of Boosting): weight of the model; new weights of the data points

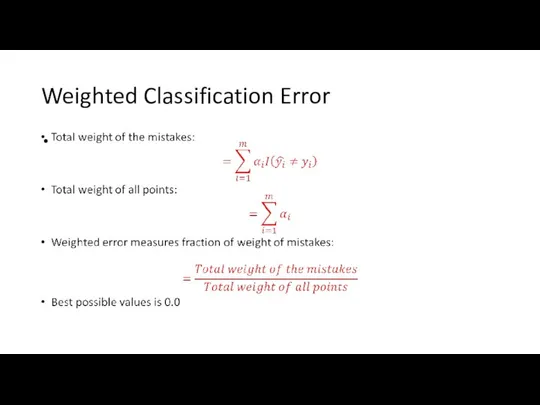

- 47. Weighted Classification Error

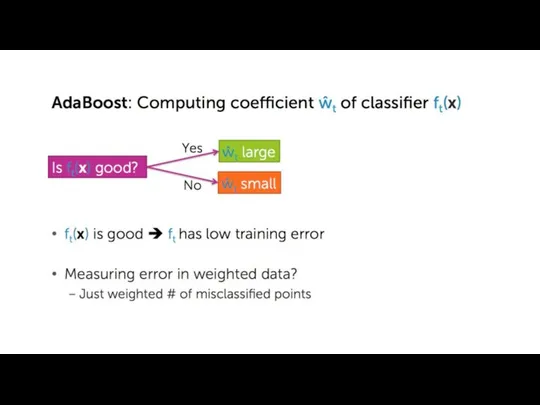

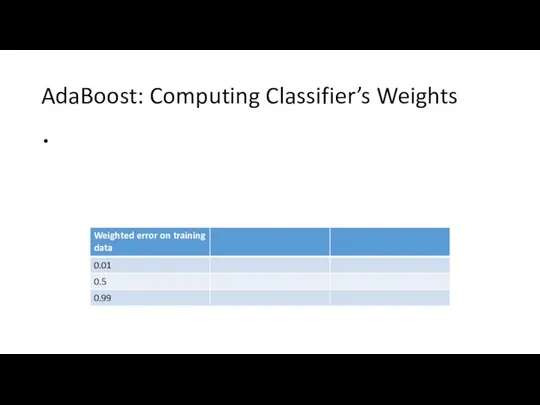
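Weighted classification error divides the total weight of the misclassified points by the total weight of all points; with equal weights it is the ordinary error rate. A minimal sketch (hypothetical function name):

```python
def weighted_error(y_true, y_pred, weights):
    """Total weight of mistakes divided by total weight of all points."""
    wrong = sum(w for t, p, w in zip(y_true, y_pred, weights) if t != p)
    return wrong / sum(weights)

print(weighted_error([1, 1, -1, -1], [1, -1, -1, 1], [0.5, 1.0, 1.5, 2.0]))
```

Here the two mistakes carry weights 1.0 and 2.0 out of a total of 5.0, so the weighted error is 0.6, even though the unweighted error would be 0.5.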

- 48. AdaBoost: Computing Classifier’s Weights

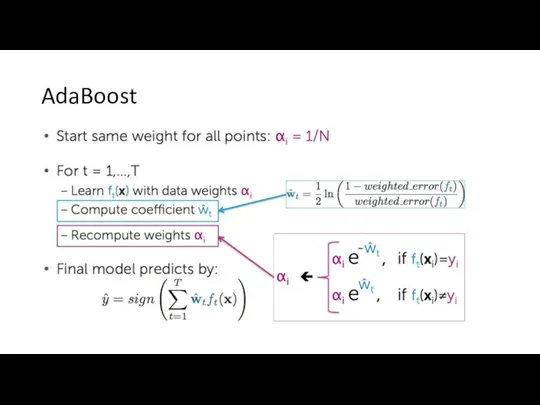

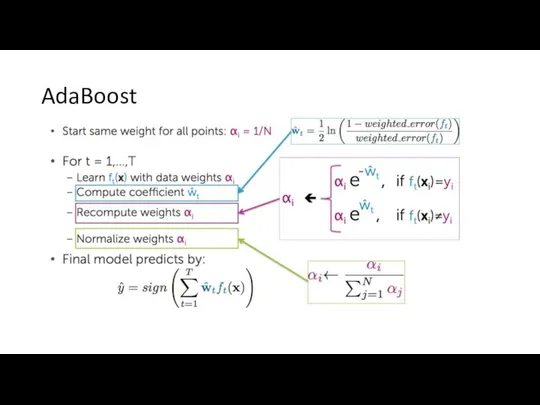
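A sketch of the classifier weight, assuming the standard AdaBoost formula α = ½ ln((1 - ε)/ε), where ε is the classifier's weighted error; the function name is hypothetical.

```python
import math

def classifier_weight(weighted_err: float) -> float:
    """AdaBoost coefficient: 0.5 * ln((1 - err) / err)."""
    if not 0.0 < weighted_err < 1.0:
        raise ValueError("weighted error must be strictly between 0 and 1")
    return 0.5 * math.log((1.0 - weighted_err) / weighted_err)

for err in (0.1, 0.2, 0.5, 0.8):
    print(err, round(classifier_weight(err), 3))
```

A classifier at chance level (ε = 0.5) gets weight 0, accurate classifiers get large positive weights, and one with ε > 0.5 gets a negative weight, which effectively flips its vote.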

- 49. AdaBoost

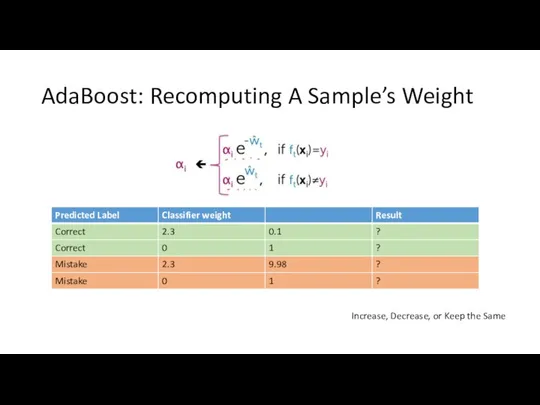

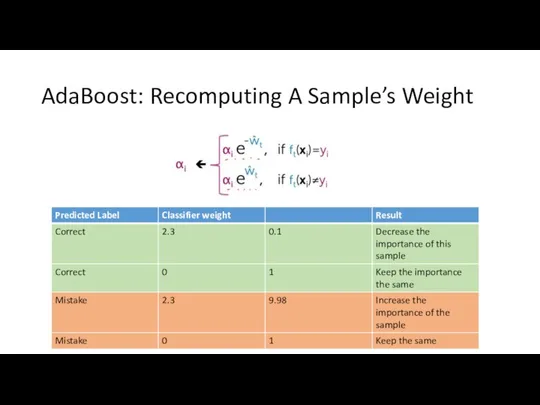

- 51. AdaBoost: Recomputing a Sample’s Weight: Increase, Decrease, or Keep the Same?

- 52. AdaBoost: Recomputing a Sample’s Weight
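A sketch of the standard AdaBoost re-weighting, assuming labels in {-1, +1}: a point the current classifier gets wrong is multiplied by e^α, a point it gets right by e^(-α). Because y·ŷ is +1 when correct and -1 when wrong, both cases fit in one expression. The function name is hypothetical.

```python
import math

def update_weights(weights, y_true, y_pred, alpha):
    """w_i <- w_i * exp(-alpha * y_i * yhat_i): mistakes grow, correct points shrink."""
    return [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]

print(update_weights([1.0, 1.0], [1, 1], [1, -1], alpha=math.log(2)))
```

So, for α > 0, a sample's weight increases if it was misclassified and decreases if it was classified correctly; it stays the same only when α = 0, i.e. when the classifier is no better than chance.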

- 53. AdaBoost

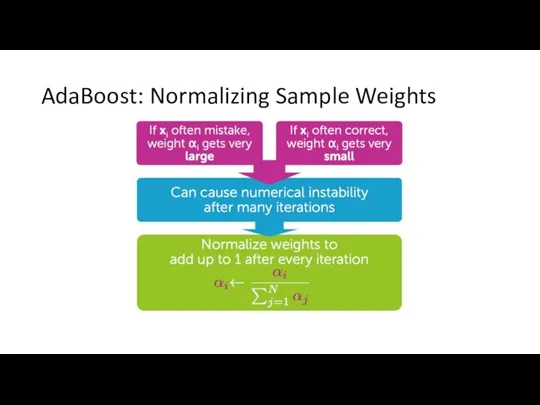

- 54. AdaBoost: Normalizing Sample Weights
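After each round the weights are rescaled to sum to 1; otherwise points that are repeatedly misclassified (or repeatedly correct) drift toward very large (or very small) weights, which can cause numerical problems. A minimal sketch (hypothetical function name):

```python
def normalize(weights):
    """Rescale sample weights so that they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]

print(normalize([0.5, 2.0, 0.5]))
```

Normalizing does not change the relative importance of the points, so the next weak learner sees the same emphasis on hard examples.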

- 55. AdaBoost

- 56. Self Study: What is the effect of increasing the number of classifiers in bagging vs.

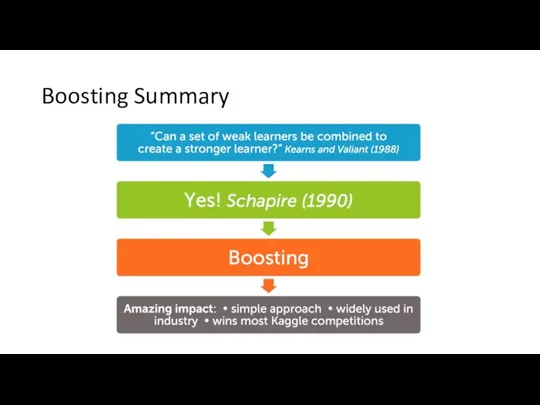

- 57. Boosting Summary

- 59. Скачать презентацию

Презентация "Презентация на тему: викторина по мультфильмам" - скачать презентации по Экономике

Презентация "Презентация на тему: викторина по мультфильмам" - скачать презентации по Экономике «НОВЫЕ ПОХОЖДЕНИЯ ВЫПУСКНИКОВ, ИЛИ УДИВИТЕЛЬНОЕ ПУТЕШЕСТВИЕ ПО ОКЕАНУ ЗНАНИЙ»

«НОВЫЕ ПОХОЖДЕНИЯ ВЫПУСКНИКОВ, ИЛИ УДИВИТЕЛЬНОЕ ПУТЕШЕСТВИЕ ПО ОКЕАНУ ЗНАНИЙ» Предприятие АО "РКЦ "Прогресс"

Предприятие АО "РКЦ "Прогресс" Я нарисую красками СУДЬБУ

Я нарисую красками СУДЬБУ  ЕДИНЫЙ ГОСУДАРСТВЕННЫЙ ЭКЗАМЕН: ПСИХОЛОГО-ПЕДАГОГИЧЕСКАЯ ПОДГОТОВКА УЧАЩИХСЯ И РОДИТЕЛЕЙ Барбитова А.Д.

ЕДИНЫЙ ГОСУДАРСТВЕННЫЙ ЭКЗАМЕН: ПСИХОЛОГО-ПЕДАГОГИЧЕСКАЯ ПОДГОТОВКА УЧАЩИХСЯ И РОДИТЕЛЕЙ Барбитова А.Д. Открытие ворот

Открытие ворот Минеральные удобрени - нитраты

Минеральные удобрени - нитраты Системный характер языка. Уровни и единицы языковой системы

Системный характер языка. Уровни и единицы языковой системы Способы измерения бытовой коррупции

Способы измерения бытовой коррупции Презентация по алгебре Теорема синусов

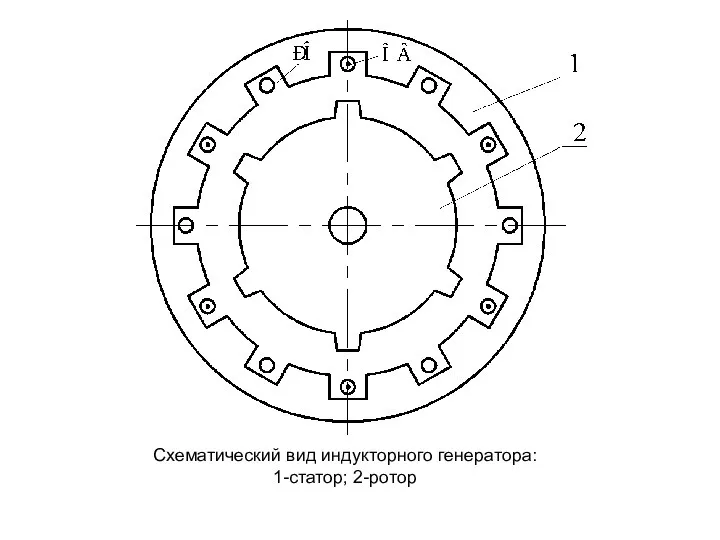

Презентация по алгебре Теорема синусов  Инд генератор

Инд генератор  Вишакха Пуджа

Вишакха Пуджа Примеры торговых марок

Примеры торговых марок Патентоспособность и патентная чистота. Защита прав на ИЗ, ПМ, ПО. Тема 4_Раздел 4 и 5_

Патентоспособность и патентная чистота. Защита прав на ИЗ, ПМ, ПО. Тема 4_Раздел 4 и 5_ Основы деятельности финансового менеджера

Основы деятельности финансового менеджера Протокольная работа. Роль протокольной работы в дипломатической деятельности

Протокольная работа. Роль протокольной работы в дипломатической деятельности Окончание процедуры банкротства и права кредиторов. (Лекция 6)

Окончание процедуры банкротства и права кредиторов. (Лекция 6) Национальное богатство

Национальное богатство  Методологические подходы в психологии

Методологические подходы в психологии органоиды1

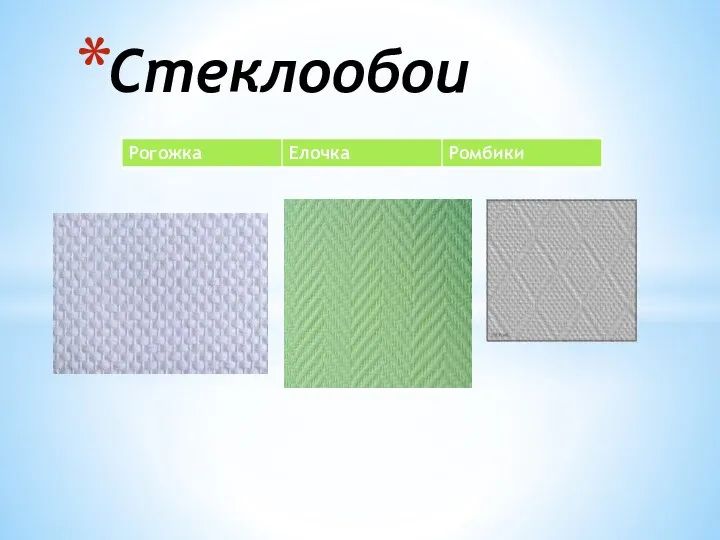

органоиды1 Стеклообои

Стеклообои Методы обследования больных в клинике ортопедической стоматологии

Методы обследования больных в клинике ортопедической стоматологии Буферные системы

Буферные системы Внеклассное занятие к неделе географии

Внеклассное занятие к неделе географии Пересечение поверхностей

Пересечение поверхностей Защита персональных данных

Защита персональных данных Культурное пространство

Культурное пространство Применение дифракционного метода суммирования в геолокации

Применение дифракционного метода суммирования в геолокации