Contents

- 2. Overview: What is this paper all about? Key ideas from the title: Context-Free Parsing, Probabilistic, Computes

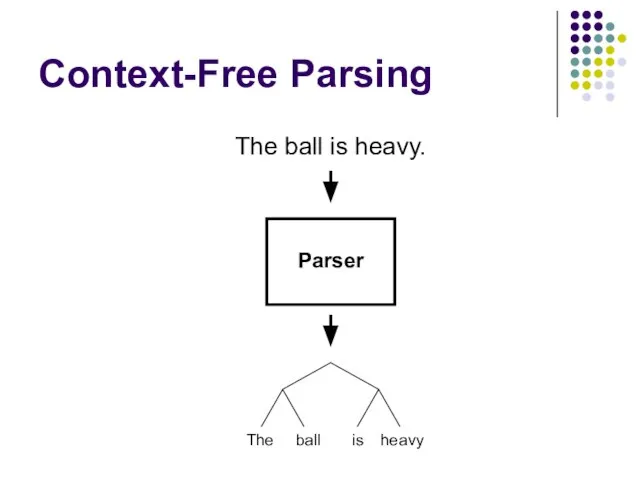

- 3. Context-Free Parsing: “The ball is heavy.” → Parser → The ball is heavy

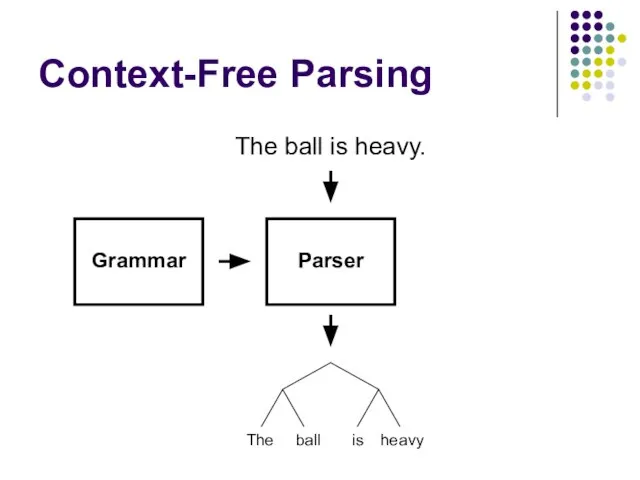

- 4. Context-Free Parsing: “The ball is heavy.” → Parser (with Grammar) → The ball is heavy

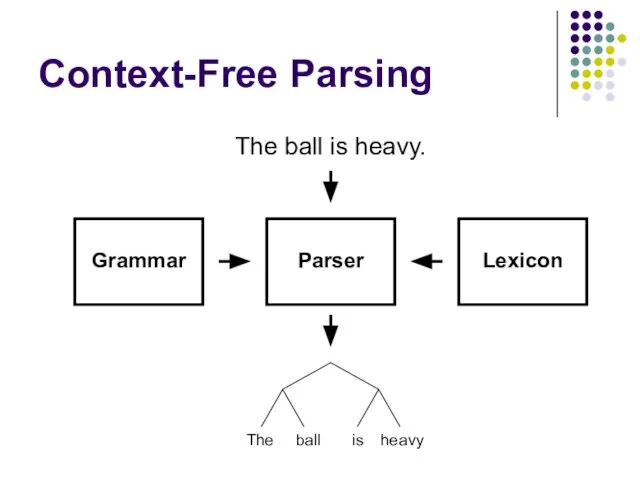

- 5. Context-Free Parsing: “The ball is heavy.” → Parser (with Grammar and Lexicon) → The ball is heavy

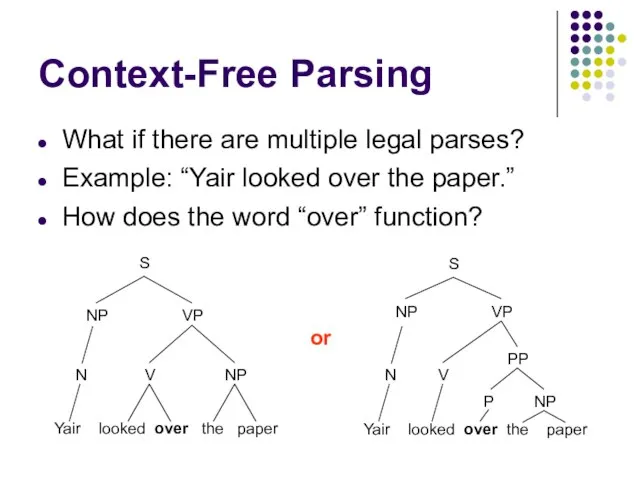
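The parser, grammar and lexicon split on slides 3-5 can be illustrated with a naive top-down (recursive-descent) parser. This is a minimal sketch, not Stolcke's method. The rules come from the later Earley example (S → NP VP, NP → ART N, VP → V ADJ). The lexicon entries and the names `GRAMMAR`, `LEXICON`, `parse`, `expand` and `parse_sentence` are hypothetical and were chosen for this illustration.

```python
# Grammar rules from the slides' running example.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["ART", "N"]],
    "VP": [["V", "ADJ"]],
}
# Hypothetical lexicon: word -> part of speech.
LEXICON = {"the": "ART", "ball": "N", "is": "V", "heavy": "ADJ"}


def parse(symbol, words, i):
    """Yield (tree, next_index) for every way `symbol` can start at words[i]."""
    if symbol not in GRAMMAR:
        # Part of speech: consult the lexicon for the next word.
        if i < len(words) and LEXICON.get(words[i]) == symbol:
            yield (symbol, words[i]), i + 1
        return
    for rhs in GRAMMAR[symbol]:
        yield from expand(symbol, rhs, words, i, [])


def expand(lhs, rhs, words, i, children):
    """Match right-hand-side symbols left to right, collecting subtrees."""
    if not rhs:
        yield (lhs, *children), i
        return
    for subtree, j in parse(rhs[0], words, i):
        yield from expand(lhs, rhs[1:], words, j, children + [subtree])


def parse_sentence(sentence):
    """Return every tree rooted in S that covers the whole sentence."""
    words = sentence.lower().rstrip(".").split()
    return [tree for tree, end in parse("S", words, 0) if end == len(words)]


print(parse_sentence("The ball is heavy."))
```

A parser like this rebuilds the same constituents every time it backtracks. It also never terminates on a left-recursive rule such as NP → NP PP. Earley's chart, introduced on the following slides, avoids both problems.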

- 6. Context-Free Parsing: What if there are multiple legal parses? Example: “Yair looked over the paper.” How

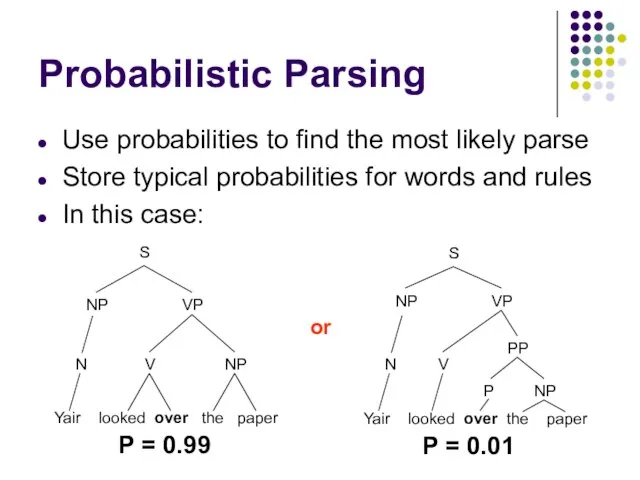

- 7. Probabilistic Parsing: Use probabilities to find the most likely parse. Store typical probabilities for words and

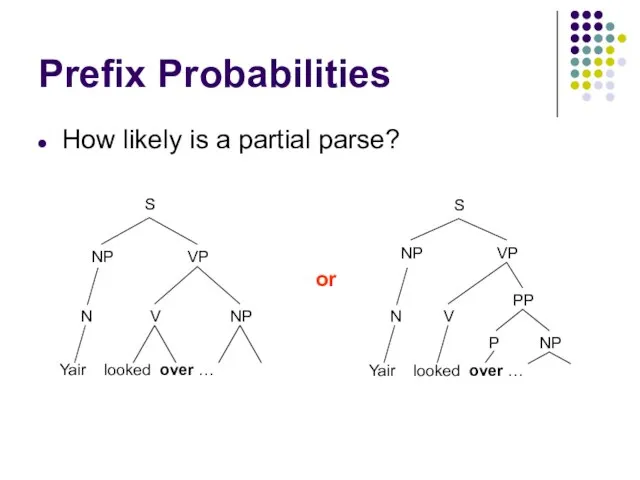

- 8. Prefix Probabilities: How likely is a partial parse? Yair looked over … Yair looked over …
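For reference, the prefix probability of a word sequence can be defined as the total probability of all complete sentences that begin with it. The brute-force sketch below computes exactly that for a tiny, non-recursive grammar by enumerating every sentence. The words "box" and "red", all probabilities, and the names `RULES`, `expansions` and `prefix_probability` are hypothetical. Enumeration grows exponentially and never terminates for recursive grammars, so a practical parser needs an incremental method instead.

```python
import itertools

# Hypothetical PCFG: the slides' grammar with made-up lexical probabilities.
RULES = {
    "S": [(("NP", "VP"), 1.0)],
    "NP": [(("ART", "N"), 1.0)],
    "VP": [(("V", "ADJ"), 1.0)],
    "ART": [(("the",), 1.0)],
    "N": [(("ball",), 0.5), (("box",), 0.5)],
    "V": [(("is",), 1.0)],
    "ADJ": [(("heavy",), 0.6), (("red",), 0.4)],
}


def expansions(symbol):
    """Yield (words, probability) for every derivation of `symbol`.

    Only terminates for non-recursive grammars like this one."""
    if symbol not in RULES:  # a terminal word
        yield (symbol,), 1.0
        return
    for rhs, p in RULES[symbol]:
        parts = [list(expansions(s)) for s in rhs]
        for combo in itertools.product(*parts):
            words = tuple(w for ws, _ in combo for w in ws)
            prob = p
            for _, q in combo:
                prob *= q
            yield words, prob


def prefix_probability(prefix):
    """Sum the probabilities of all sentences that start with `prefix`."""
    prefix = tuple(prefix)
    return sum(p for words, p in expansions("S") if words[: len(prefix)] == prefix)


for k in range(5):
    prefix = ["the", "ball", "is", "heavy"][:k]
    print(prefix, prefix_probability(prefix))
```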

- 9. Efficiency: The Earley algorithm (upon which Stolcke builds) is one of the most efficient known parsing

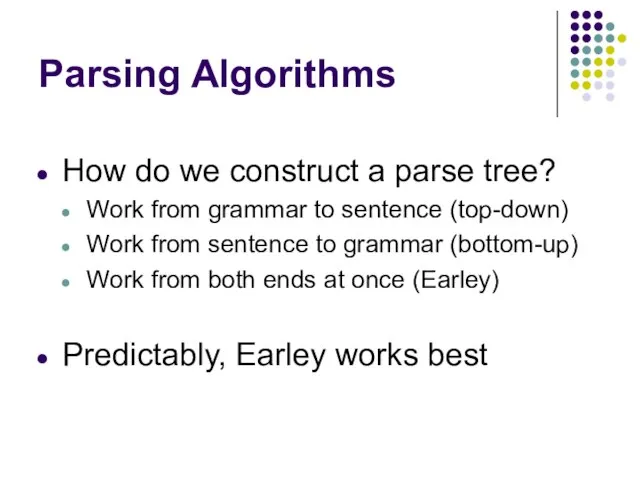

- 10. Parsing Algorithms: How do we construct a parse tree? Work from grammar to sentence (top-down). Work

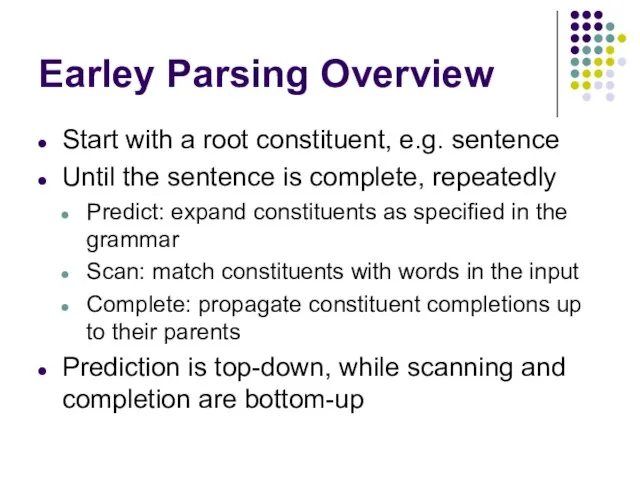

- 11. Earley Parsing Overview: Start with a root constituent, e.g. a sentence. Until the sentence is complete, repeatedly

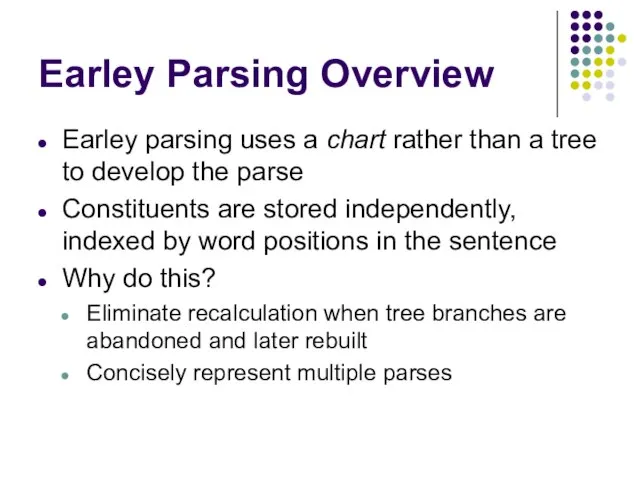

- 12. Earley Parsing Overview: Earley parsing uses a chart rather than a tree to develop the parse.

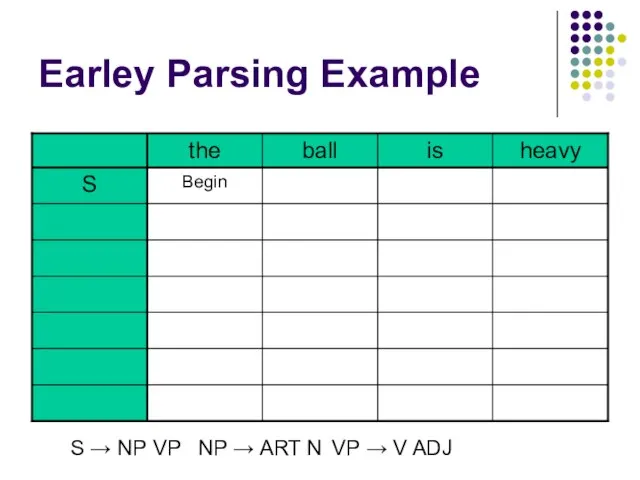

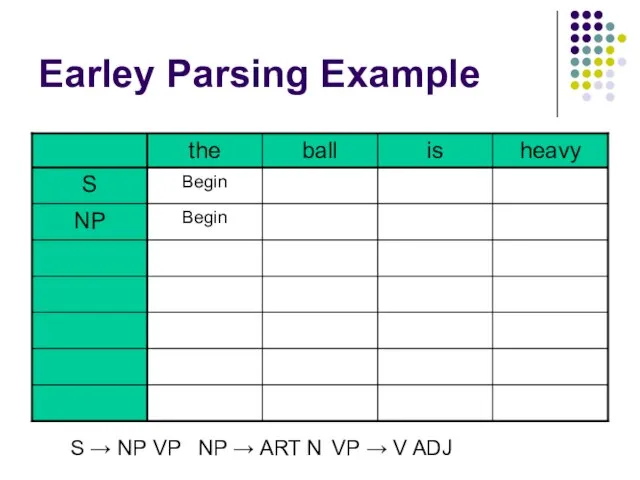

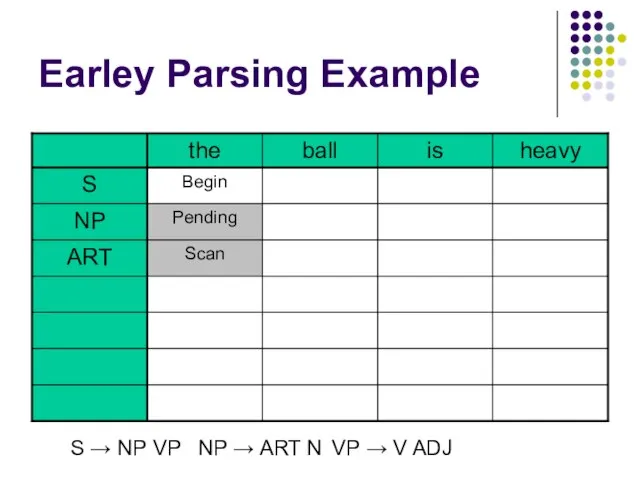

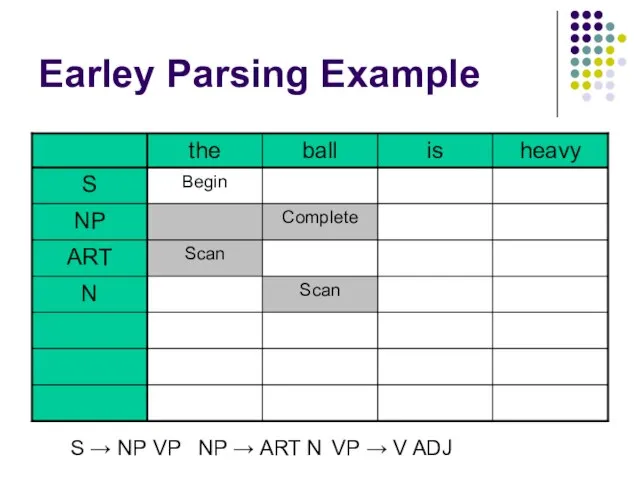

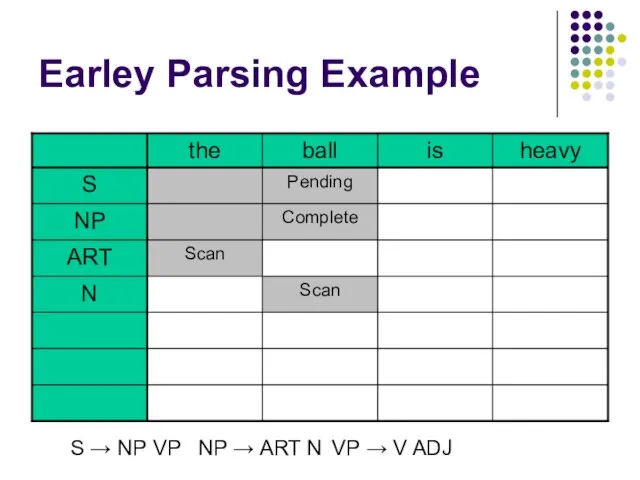

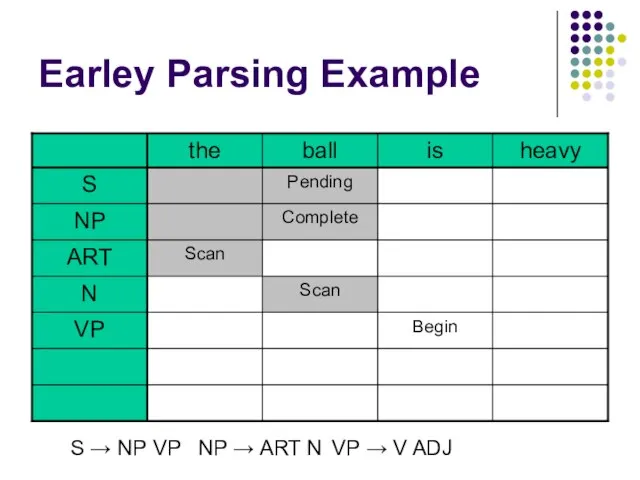

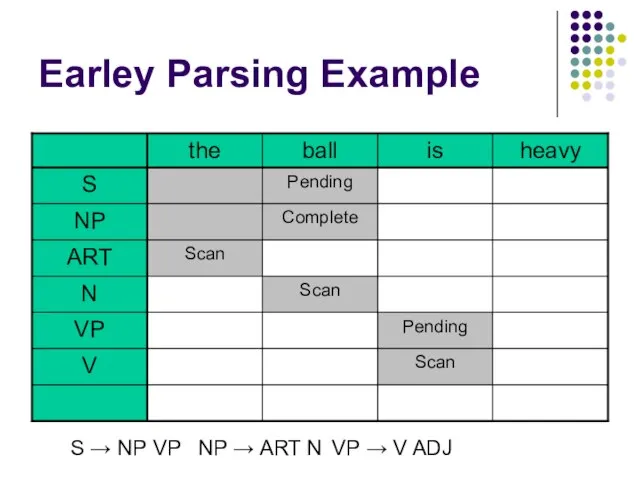

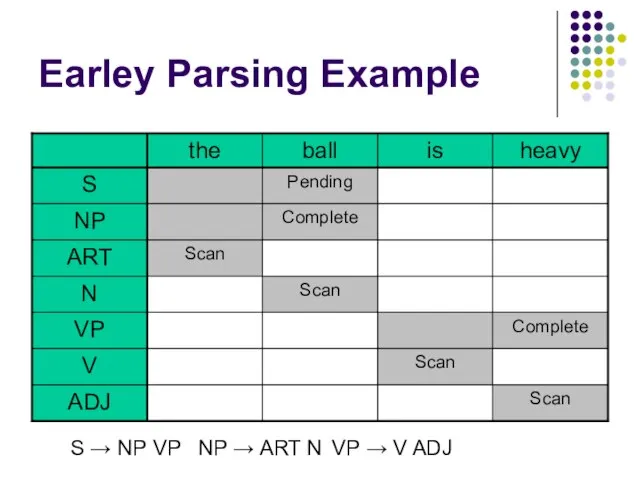

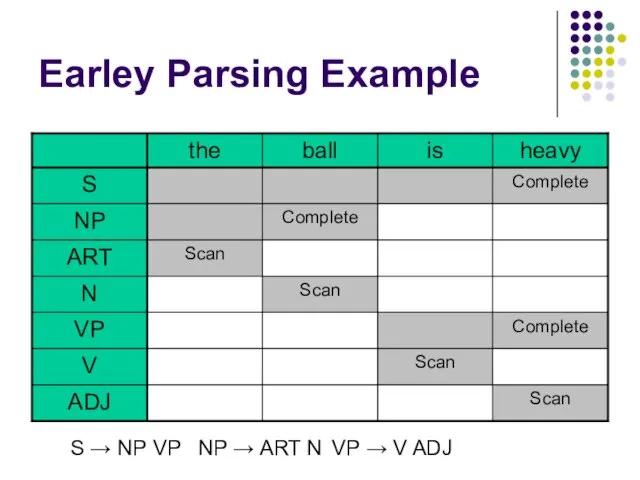

- 13. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 14. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 15. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 16. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 17. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 18. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 19. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 20. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 21. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ
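The walkthrough on slides 11-21 can be condensed into a small Earley recognizer. This is a minimal sketch under several assumptions. It uses the example grammar S → NP VP, NP → ART N, VP → V ADJ with a hypothetical lexicon, and it scans parts of speech through that lexicon. It also omits the extra bookkeeping that empty (ε) rules would require. Each state is a dotted rule plus the chart position where its constituent started. The names `State`, `earley_recognize`, `recognizes` and `show` are illustrative, not taken from the slides.

```python
from collections import namedtuple

# An Earley state: dotted rule lhs -> rhs (dot before rhs[dot]) plus the
# chart position (origin) where the constituent started.
State = namedtuple("State", "lhs rhs dot origin")

# Example grammar from the slides; the lexicon entries are assumed.
GRAMMAR = {
    "S": [("NP", "VP")],
    "NP": [("ART", "N")],
    "VP": [("V", "ADJ")],
}
LEXICON = {"the": {"ART"}, "ball": {"N"}, "is": {"V"}, "heavy": {"ADJ"}}


def next_symbol(state):
    """Symbol right after the dot, or None if the state is complete."""
    return state.rhs[state.dot] if state.dot < len(state.rhs) else None


def earley_recognize(words, start="S"):
    """Build the chart: chart[k] lists the states reached after k words."""
    chart = [[] for _ in range(len(words) + 1)]

    def add(k, state):
        if state not in chart[k]:  # each state is stored only once
            chart[k].append(state)

    add(0, State("GAMMA", (start,), 0, 0))  # dummy root state
    for k in range(len(words) + 1):
        i = 0
        while i < len(chart[k]):  # chart[k] can grow while we walk it
            state = chart[k][i]
            sym = next_symbol(state)
            if sym in GRAMMAR:
                # Prediction: start every rule that can build `sym` here.
                for rhs in GRAMMAR[sym]:
                    add(k, State(sym, rhs, 0, k))
            elif sym is not None:
                # Scanning: `sym` is a part of speech; check the next word.
                if k < len(words) and sym in LEXICON.get(words[k], ()):
                    add(k + 1, state._replace(dot=state.dot + 1))
            else:
                # Completion: advance states that were waiting for state.lhs.
                for waiting in chart[state.origin]:
                    if next_symbol(waiting) == state.lhs:
                        add(k, waiting._replace(dot=waiting.dot + 1))
            i += 1
    return chart


def recognizes(sentence):
    """True if the completed root state appears in the last chart column."""
    words = sentence.lower().rstrip(".").split()
    final = State("GAMMA", ("S",), 1, 0)
    return final in earley_recognize(words)[-1]


def show(state):
    """Format a state as e.g. 'NP → ART • N [0]'."""
    rhs = list(state.rhs)
    rhs.insert(state.dot, "•")
    return f"{state.lhs} → {' '.join(rhs)} [{state.origin}]"


for k, states in enumerate(earley_recognize("the ball is heavy".split())):
    print(k, [show(s) for s in states])
```

Because each state is stored at most once per chart position, no constituent is rebuilt when an alternative fails. A left-recursive rule would only add one more predicted state instead of recursing forever.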

- 22. Probabilistic Parsing: How do we parse probabilistically? Assign probabilities to grammar rules and to words in the lexicon.
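One way to attach the probabilities from slide 22 is to store a distribution over expansions for each left-hand side. The probabilities of all rules for a nonterminal, or of all words for a part of speech, should then sum to 1. The sketch below checks that constraint. All numbers, the extra words "box" and "red", and the names `RULES`, `LEXICON` and `check_normalized` are hypothetical. Because the example grammar has a single rule per nonterminal, only the lexicon entries involve real choices.

```python
# Hypothetical probabilities for the slides' example grammar.
# Rules: P(lhs -> rhs | lhs); lexicon: P(word | part of speech).
RULES = {
    "S": {("NP", "VP"): 1.0},
    "NP": {("ART", "N"): 1.0},
    "VP": {("V", "ADJ"): 1.0},
}
LEXICON = {
    "ART": {"the": 1.0},
    "N": {"ball": 0.5, "box": 0.5},
    "V": {"is": 1.0},
    "ADJ": {"heavy": 0.6, "red": 0.4},
}


def check_normalized(table, tol=1e-9):
    """Raise ValueError if any left-hand side's options do not sum to 1."""
    for lhs, options in table.items():
        total = sum(options.values())
        if abs(total - 1.0) > tol:
            raise ValueError(f"probabilities for {lhs} sum to {total}, not 1")


check_normalized(RULES)
check_normalized(LEXICON)  # passes, e.g. N: ball 0.5 + box 0.5
```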

- 23. Probabilistic Parsing Terminology: Earley state: each step of the processing that a constituent undergoes. Examples: Starting

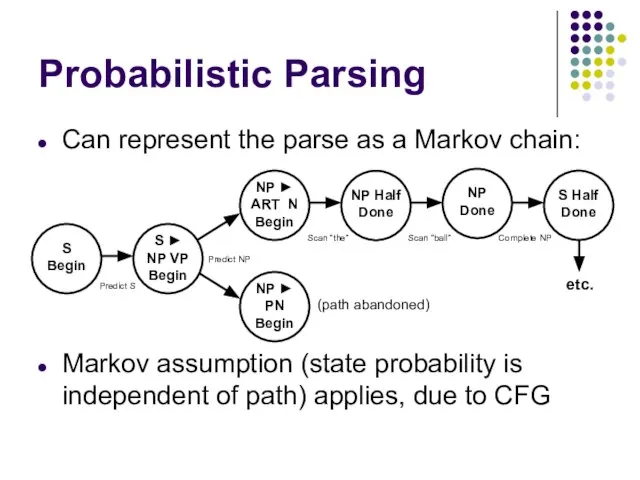

- 24. Probabilistic Parsing: The parse can be represented as a Markov chain. Markov assumption (state probability is

- 25. Probabilistic Parsing: Every Earley path corresponds to a parse tree, so P(tree) = P(path). Assign a probability

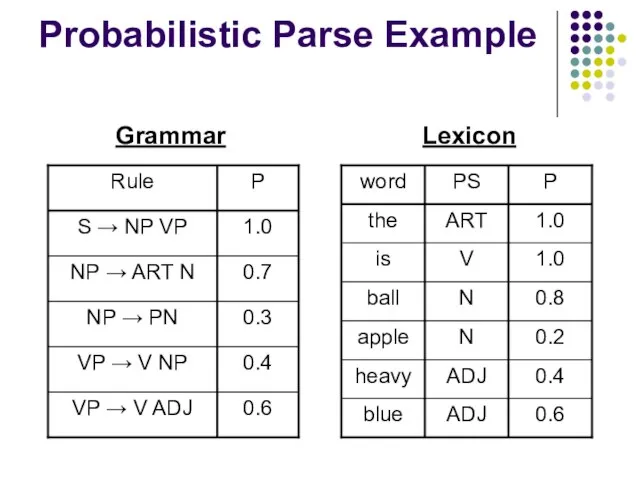
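Slide 25's P(tree) = P(path) corresponds, in the standard PCFG model, to multiplying the probabilities of every rule and lexical entry the derivation uses. The sketch below assumes trees are nested tuples `(label, child, ...)`. It uses hypothetical probabilities (ball and box 0.5 each, heavy 0.6, red 0.4, every other choice 1.0). The names `RULE_P`, `WORD_P` and `tree_probability` are illustrative.

```python
import math

# Hypothetical probabilities: P(lhs -> rhs | lhs) and P(word | part of speech).
RULE_P = {
    ("S", ("NP", "VP")): 1.0,
    ("NP", ("ART", "N")): 1.0,
    ("VP", ("V", "ADJ")): 1.0,
}
WORD_P = {
    ("ART", "the"): 1.0,
    ("N", "ball"): 0.5,
    ("N", "box"): 0.5,
    ("V", "is"): 1.0,
    ("ADJ", "heavy"): 0.6,
    ("ADJ", "red"): 0.4,
}


def tree_probability(tree):
    """P(tree): product of the probabilities of every rule used in the tree."""
    label, *children = tree
    if len(children) == 1 and isinstance(children[0], str):
        return WORD_P[(label, children[0])]  # preterminal -> word
    rhs = tuple(child[0] for child in children)
    return RULE_P[(label, rhs)] * math.prod(tree_probability(c) for c in children)


tree = ("S", ("NP", ("ART", "the"), ("N", "ball")),
             ("VP", ("V", "is"), ("ADJ", "heavy")))
print(tree_probability(tree))  # 0.5 (ball) * 0.6 (heavy) = 0.3
```

`math.prod` requires Python 3.8 or later.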

- 26. Probabilistic Parse Example: Grammar, Lexicon

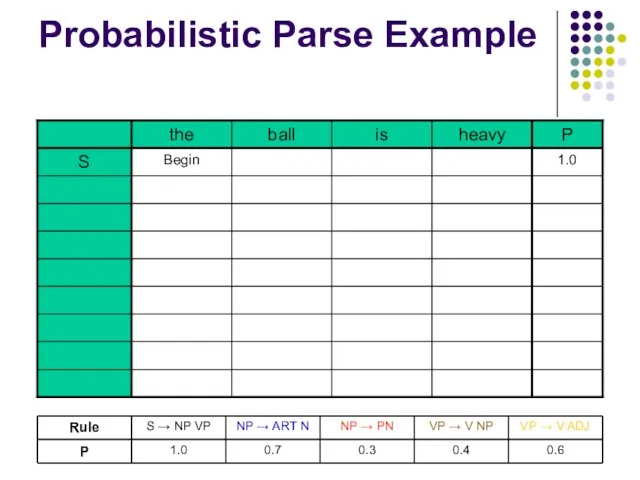

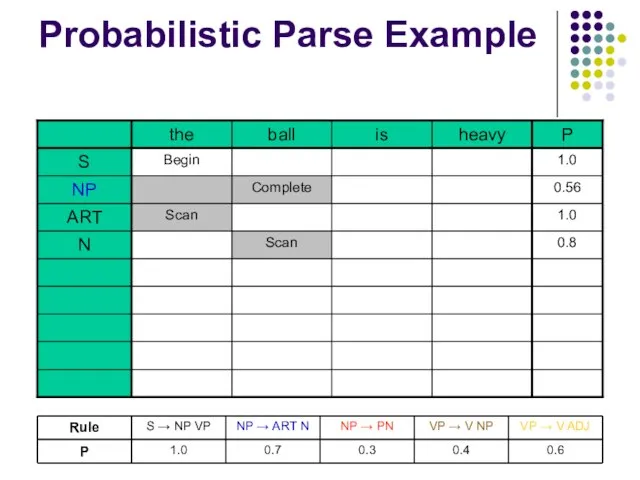

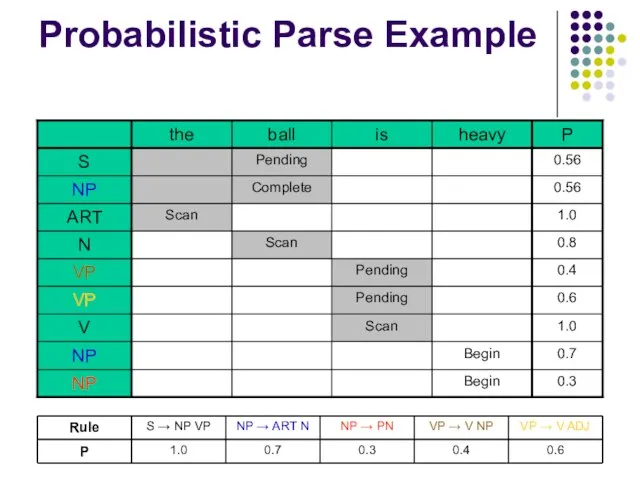

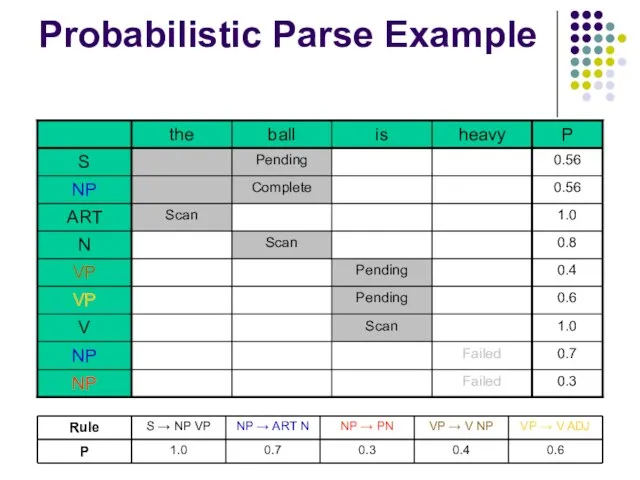

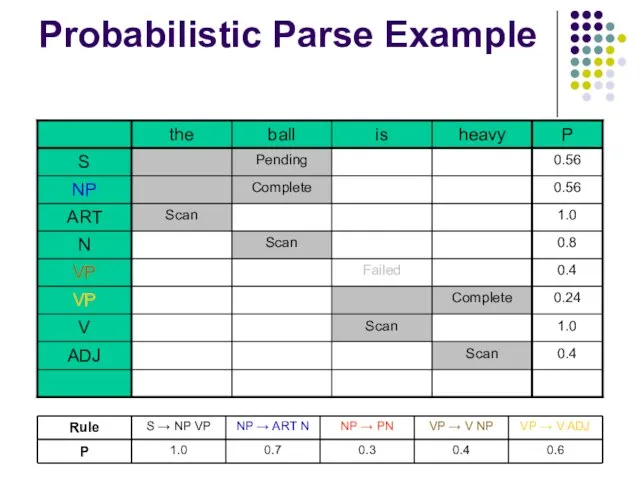

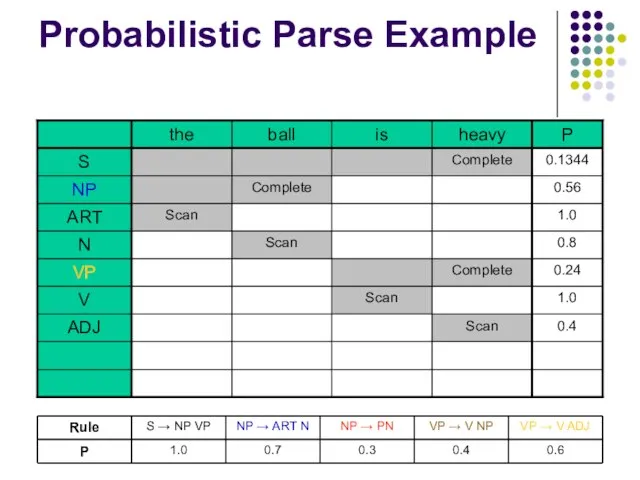

- 27. Probabilistic Parse Example

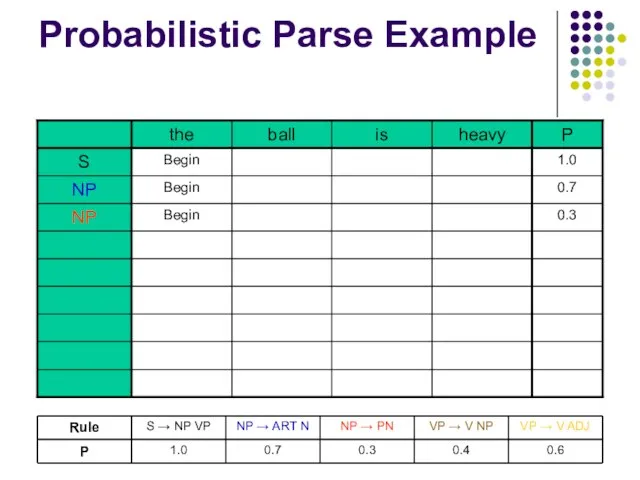

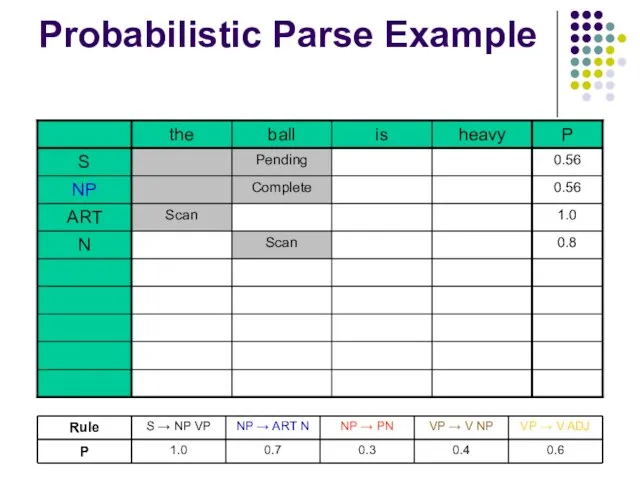

- 28. Probabilistic Parse Example

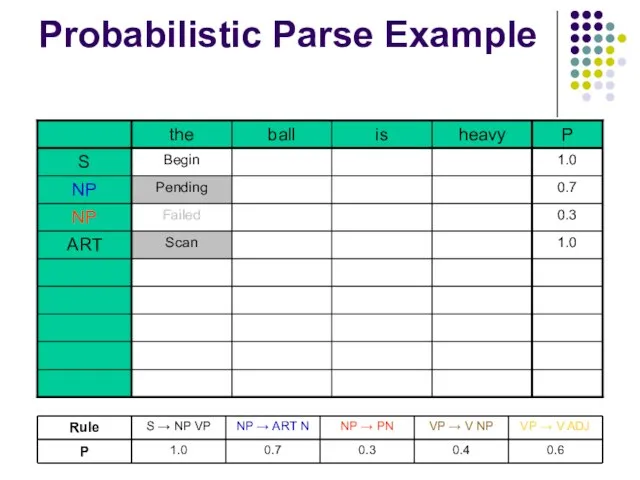

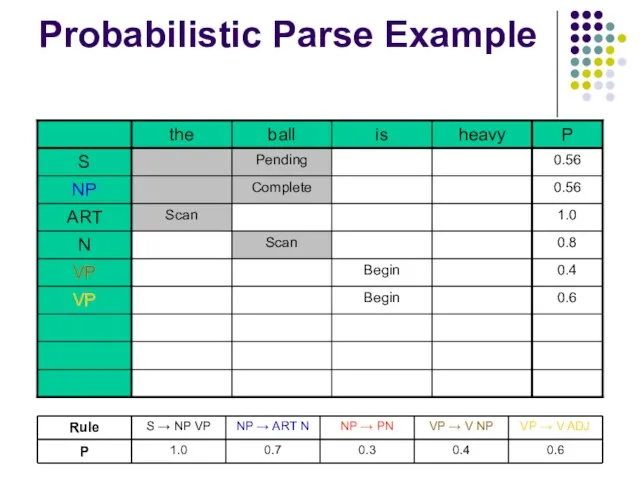

- 29. Probabilistic Parse Example

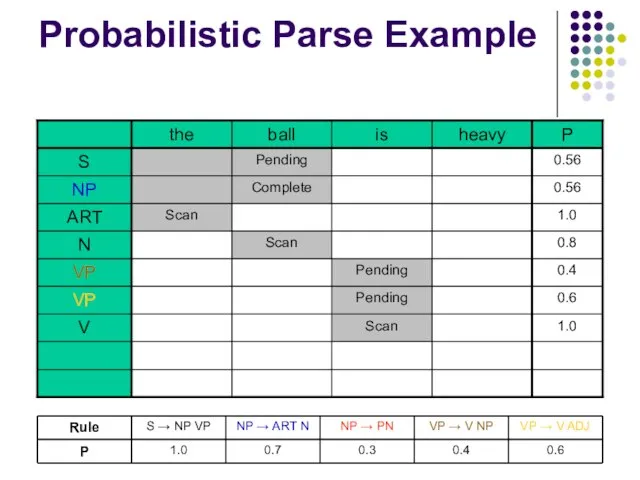

- 30. Probabilistic Parse Example

- 31. Probabilistic Parse Example

- 32. Probabilistic Parse Example

- 33. Probabilistic Parse Example

- 34. Probabilistic Parse Example

- 35. Probabilistic Parse Example

- 36. Probabilistic Parse Example

- 37. Probabilistic Parse Example

- 38. Prefix Probabilities: The current algorithm reports the parse-tree probability when the sentence is completed. What if we

- 39. Prefix Probabilities: The solution is to add a separate path probability. It is computed as before, but it propagates down on prediction

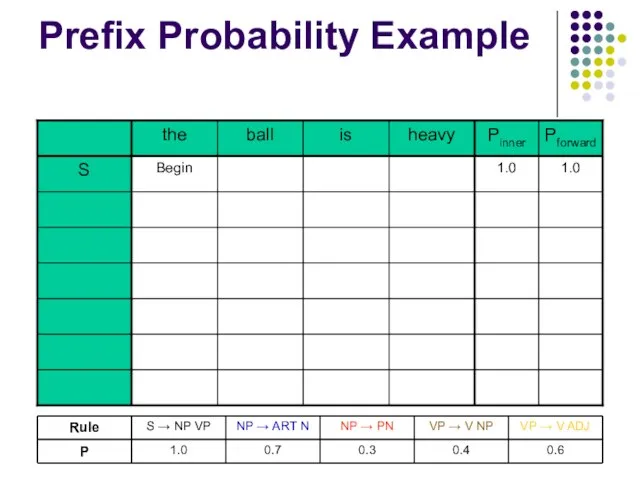
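The idea on slide 39 can be sketched with Stolcke's forward (α) and inner (γ) probabilities, which we take to correspond to the slides' path and parse probabilities. On prediction, the inner probability is reset to the rule's probability, while the forward probability is multiplied down into the predicted state. The prefix probability after k words is the sum of the forward probabilities of the states just created by scanning. To stay short, the sketch assumes a grammar with no left recursion, no unit productions and no empty rules. It therefore omits the left-corner and unit-production closures that the full algorithm needs. The lexical probabilities and the names `RULES`, `next_sym` and `prefix_probabilities` are hypothetical.

```python
import heapq
from collections import defaultdict

# Hypothetical PCFG: the slides' grammar, with words as terminals in rules.
RULES = {
    "S": [(("NP", "VP"), 1.0)],
    "NP": [(("ART", "N"), 1.0)],
    "VP": [(("V", "ADJ"), 1.0)],
    "ART": [(("the",), 1.0)],
    "N": [(("ball",), 0.5), (("box",), 0.5)],
    "V": [(("is",), 1.0)],
    "ADJ": [(("heavy",), 0.6), (("red",), 0.4)],
}


def next_sym(state):
    """Symbol after the dot of (lhs, rhs, dot, origin), or None if complete."""
    _, rhs, dot, _ = state
    return rhs[dot] if dot < len(rhs) else None


def prefix_probabilities(words, start="S"):
    """Return ([P(prefix of length k) for k = 1..n], P(whole sentence))."""
    # chart[k] maps a state (lhs, rhs, dot, origin) to [forward, inner].
    chart = [defaultdict(lambda: [0.0, 0.0]) for _ in range(len(words) + 1)]
    chart[0][("ROOT", (start,), 0, 0)] = [1.0, 1.0]

    def predict(k, sym, alpha):
        for rhs, p in RULES[sym]:
            entry = chart[k][(sym, rhs, 0, k)]
            entry[0] += alpha * p  # forward probability propagates down
            entry[1] = p           # inner probability is just the rule's
            if rhs[0] in RULES:    # safe only without left recursion
                predict(k, rhs[0], alpha * p)

    prefix_probs = []
    for k in range(len(words) + 1):
        # Completion, latest origin first, so each inner probability is
        # final before it is used to advance the states waiting for it.
        heap = [(-s[3], s) for s in chart[k] if next_sym(s) is None]
        heapq.heapify(heap)
        done = set()
        while heap:
            _, finished = heapq.heappop(heap)
            if finished in done:
                continue
            done.add(finished)
            inner = chart[k][finished][1]
            for s, (a, g) in list(chart[finished[3]].items()):
                if next_sym(s) == finished[0]:
                    adv = (s[0], s[1], s[2] + 1, s[3])
                    entry = chart[k][adv]
                    entry[0] += a * inner
                    entry[1] += g * inner
                    if next_sym(adv) is None:
                        heapq.heappush(heap, (-adv[3], adv))
        # Prediction from each scanned/completed state awaiting a nonterminal.
        for s, (a, _) in list(chart[k].items()):
            if next_sym(s) in RULES:
                predict(k, next_sym(s), a)
        # Scanning: advance states whose next symbol is the next word.
        if k < len(words):
            total = 0.0
            for s, (a, g) in chart[k].items():
                if next_sym(s) == words[k]:
                    chart[k + 1][(s[0], s[1], s[2] + 1, s[3])] = [a, g]
                    total += a
            prefix_probs.append(total)
    sentence_prob = chart[-1][("ROOT", (start,), 1, 0)][1]
    return prefix_probs, sentence_prob


print(prefix_probabilities(["the", "ball", "is", "heavy"]))
```

For "the ball is heavy", this yields prefix probabilities 1.0, 0.5, 0.5 and 0.3. The inner probability of the completed root state, 0.3, is the probability of the full sentence. For "the ball", the prefix probability is still 0.5, but the sentence probability is 0.0 because the prefix is not a complete sentence.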

- 40. Prefix Probability Example

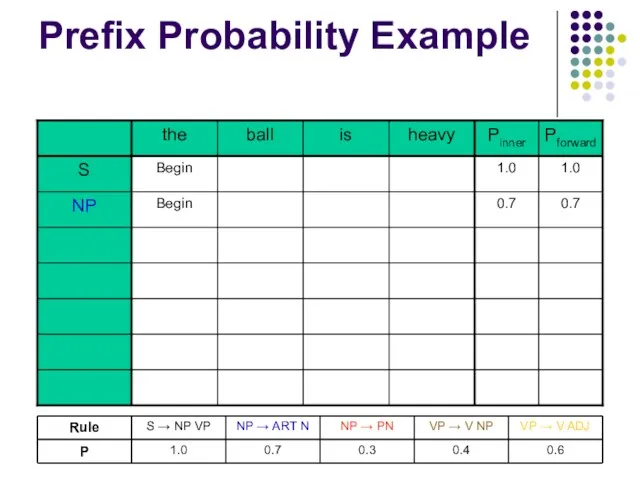

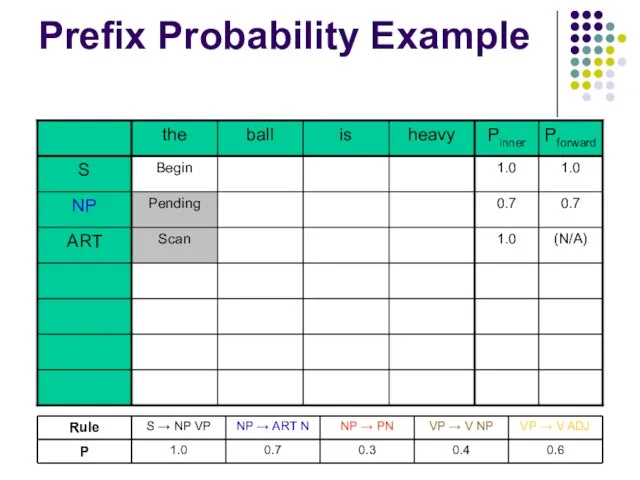

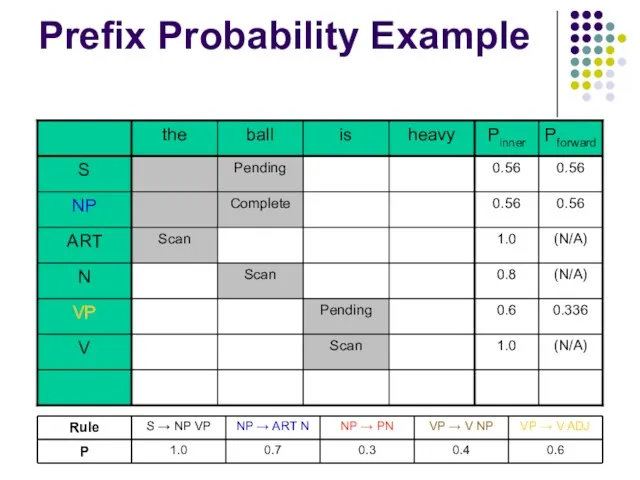

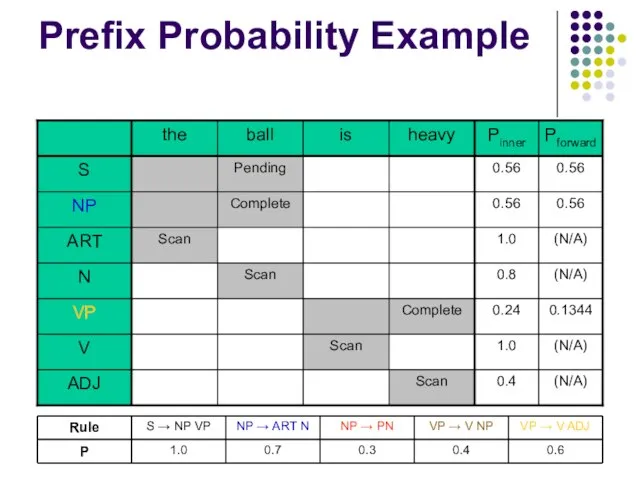

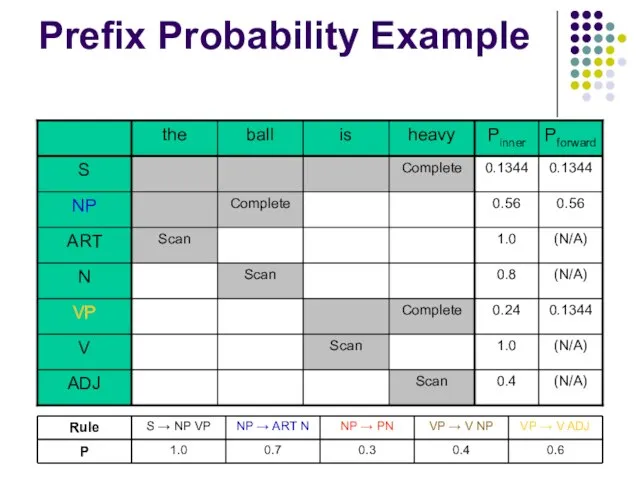

- 41. Prefix Probability Example

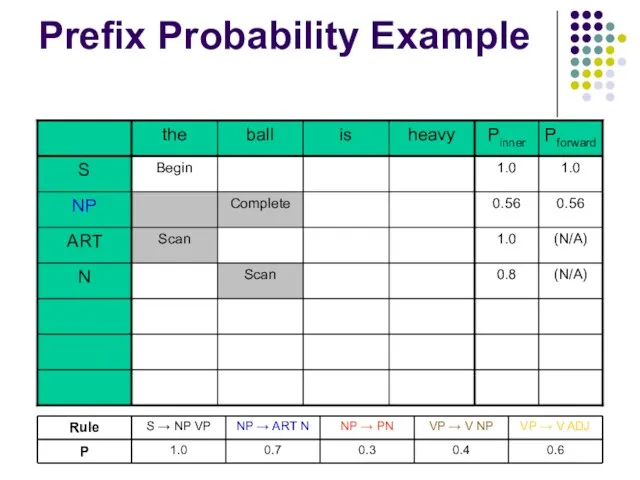

- 42. Prefix Probability Example

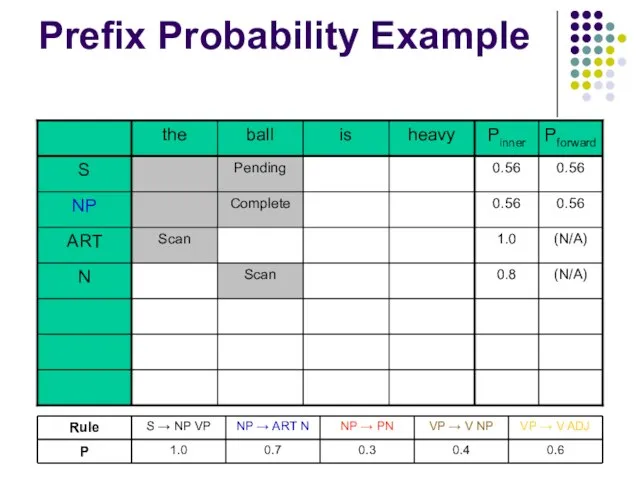

- 43. Prefix Probability Example

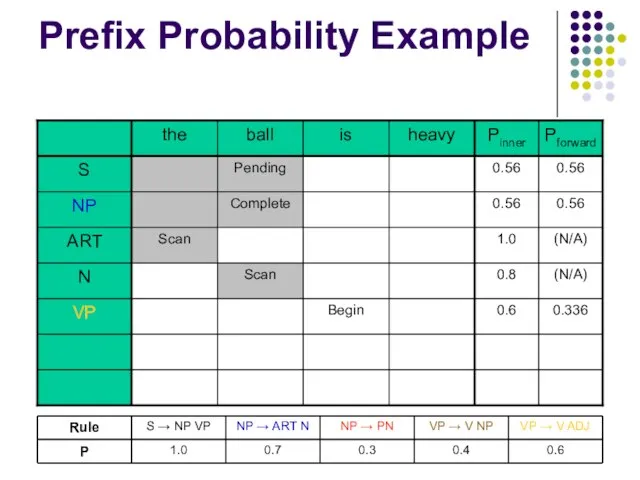

- 44. Prefix Probability Example

- 45. Prefix Probability Example

- 46. Prefix Probability Example

- 47. Prefix Probability Example

- 48. Prefix Probability Example
