Содержание

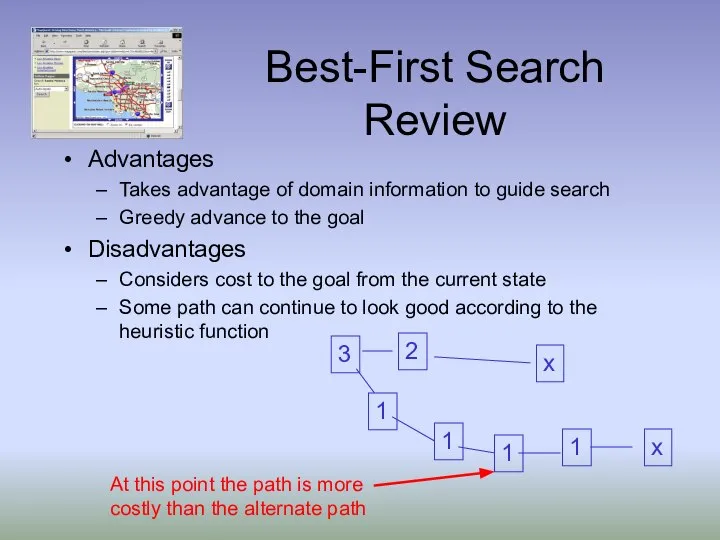

- 2. Best-First Search Review Advantages Takes advantage of domain information to guide search Greedy advance to the

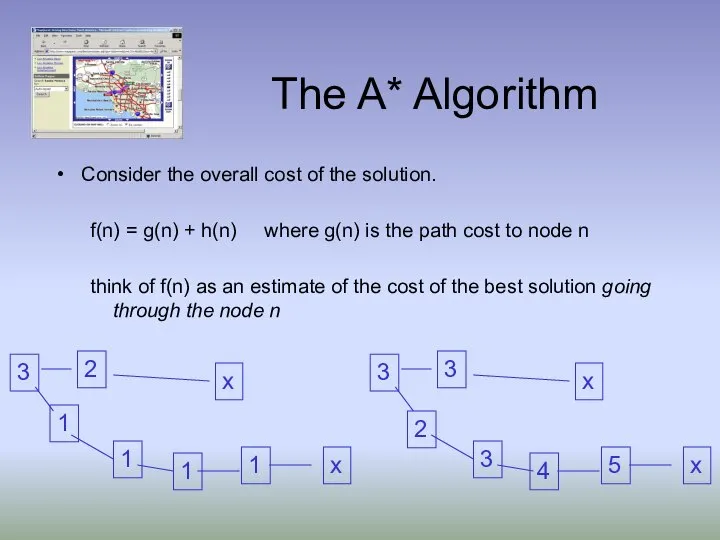

- 3. The A* Algorithm Consider the overall cost of the solution. f(n) = g(n) + h(n) where

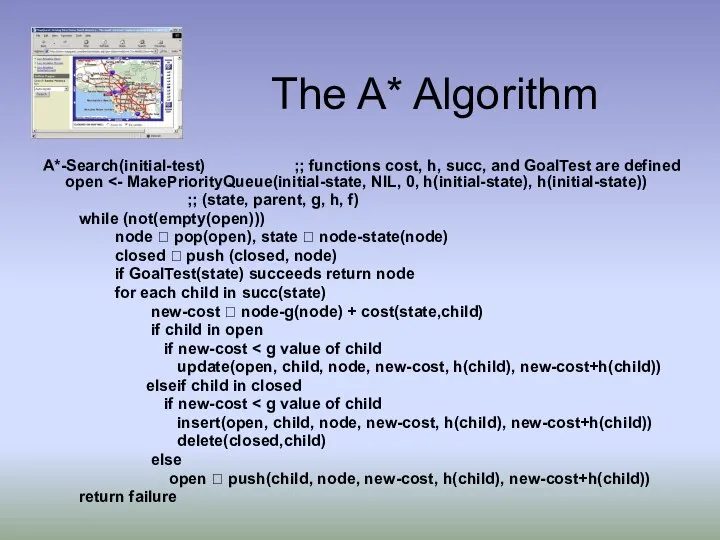

- 4. The A* Algorithm A*-Search(initial-test) ;; functions cost, h, succ, and GoalTest are defined open ;; (state,

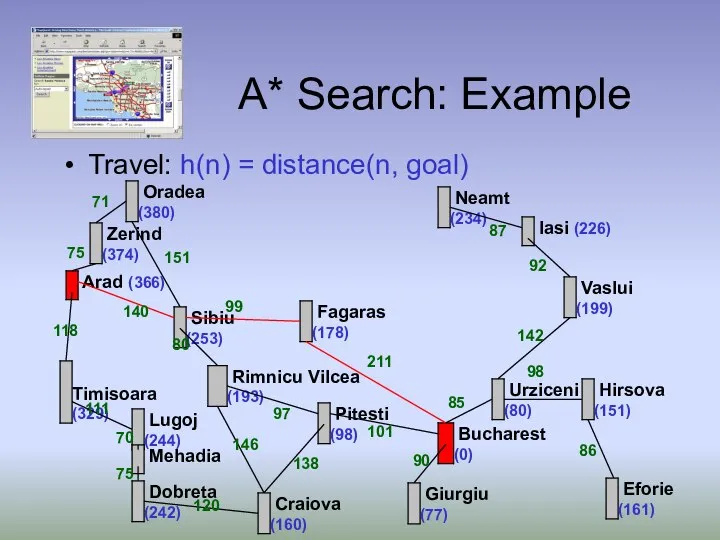

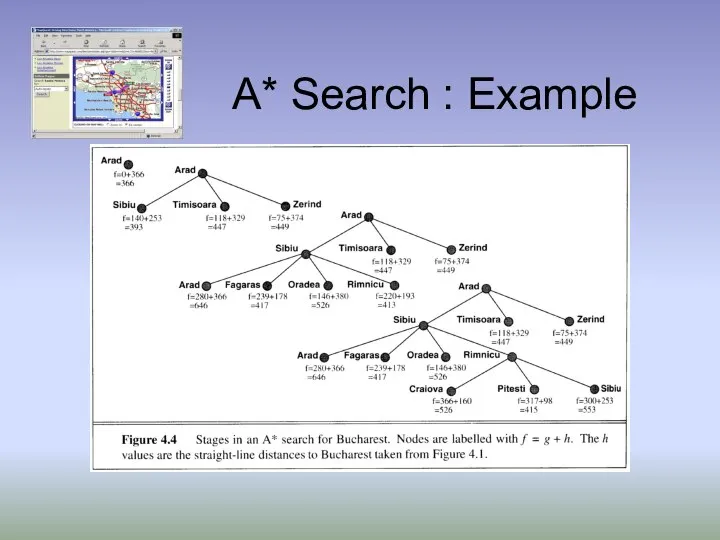

- 5. A* Search: Example Travel: h(n) = distance(n, goal) 71 142 85 90 101 97 99 140

- 6. A* Search : Example

- 7. Admissible Heuristics we also require h be admissible: a heuristic h is admissible if h(n) where

- 8. Optimality of A* Let us assume that f is non-decreasing along each path if not, simply

- 9. Completeness of A* Suppose there is a goal state G with path cost f* Intuitively: since

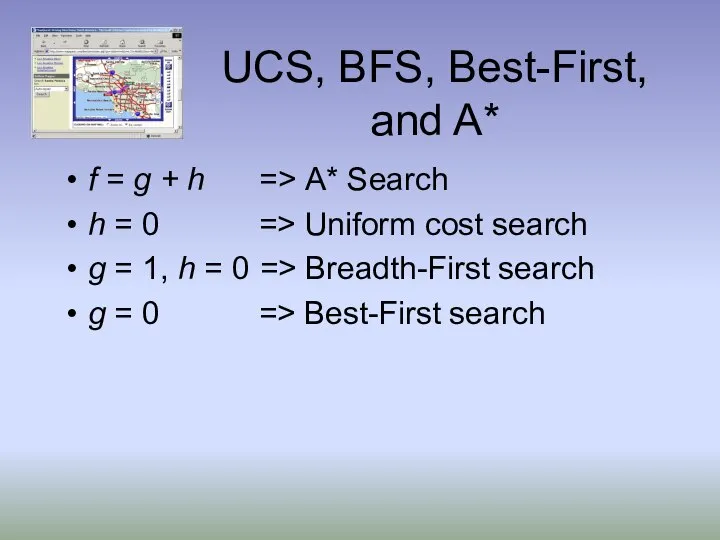

- 10. UCS, BFS, Best-First, and A* f = g + h => A* Search h = 0

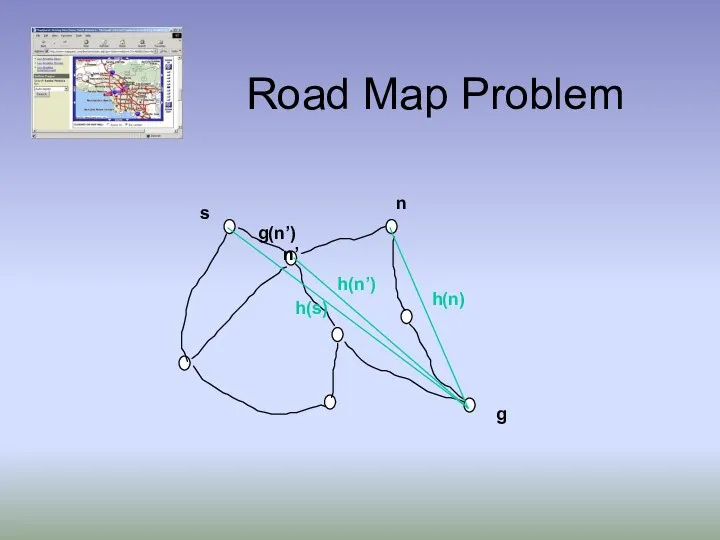

- 11. Road Map Problem s g h(s) n h(n) n’ h(n’) g(n’)

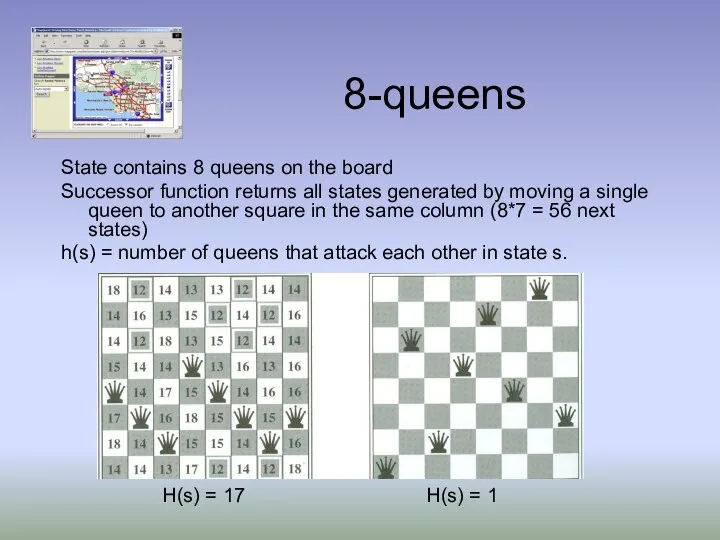

- 12. 8-queens State contains 8 queens on the board Successor function returns all states generated by moving

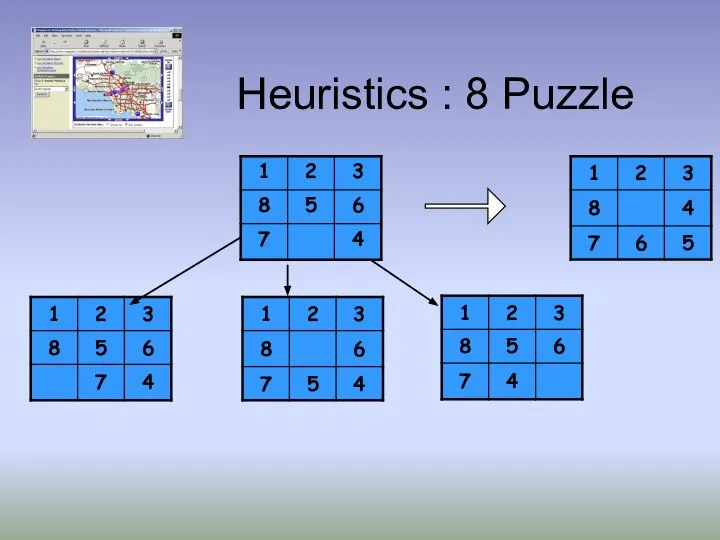

- 13. Heuristics : 8 Puzzle

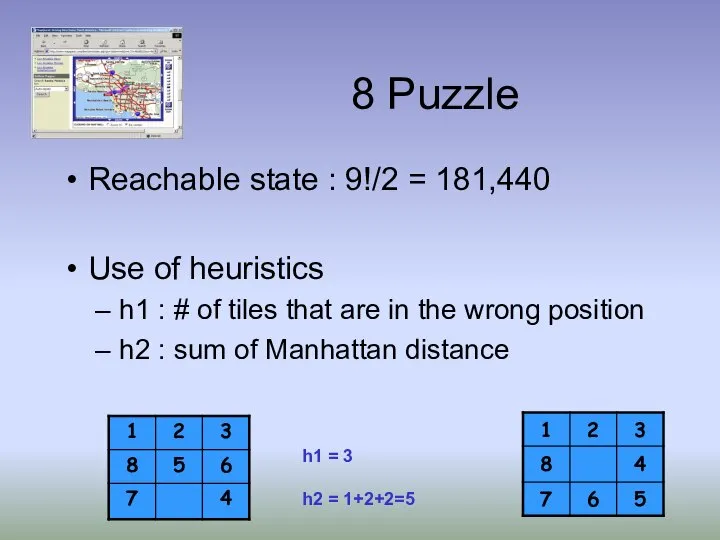

- 14. 8 Puzzle Reachable state : 9!/2 = 181,440 Use of heuristics h1 : # of tiles

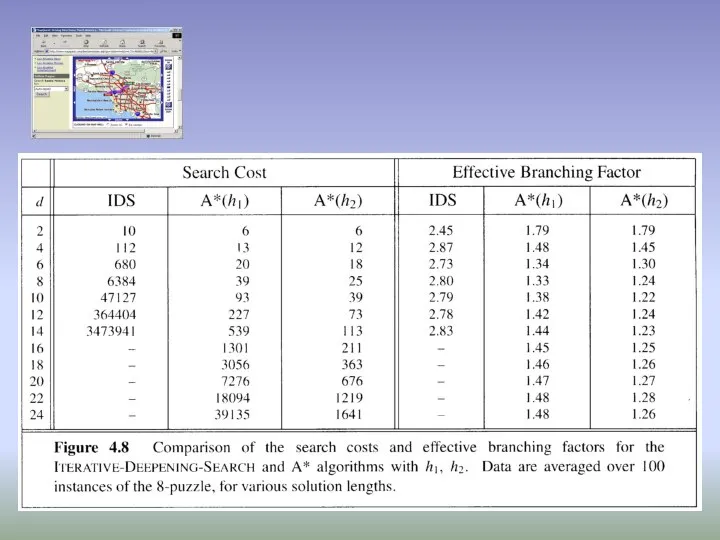

- 15. Effect of Heuristic Accuracy on Performance Well-designed heuristic have its branch close to 1 h2 dominates

- 17. A* summary Completeness provided finite branching factor and finite cost per operator Optimality provided we use

- 18. Relax Optimality Goals: Minimizing search cost Satisficing solution, i.e. bounded error in the solution f(s) =

- 19. Iterative Deepening A* Goals A storage efficient algorithm that we can use in practice Still complete

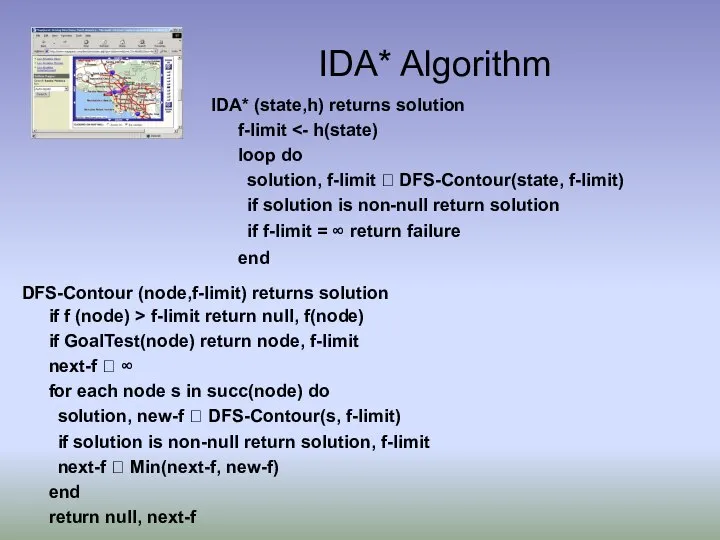

- 20. IDA* Algorithm IDA* (state,h) returns solution f-limit loop do solution, f-limit ? DFS-Contour(state, f-limit) if solution

- 21. IDA* Properties Complete: if shortest path fits into memory Optimal: if shortest optimal path fits into

- 23. Скачать презентацию

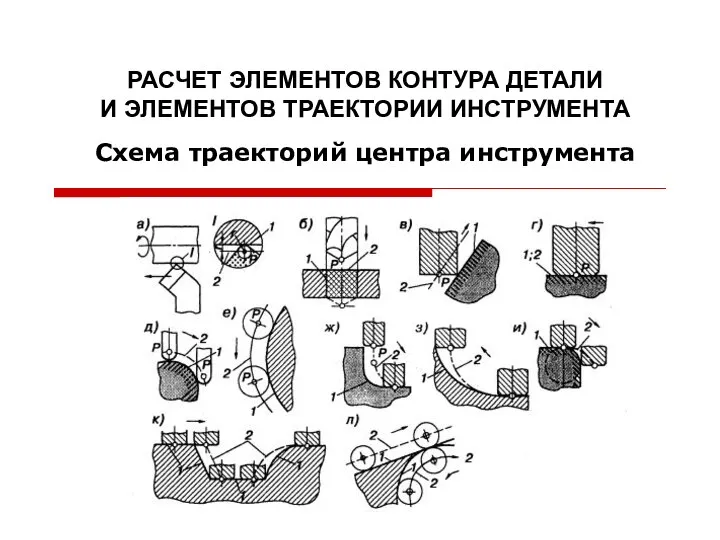

Расчет элементов контура детали и элементов траектории инструмента. Схема траекторий центра инструмента (02)

Расчет элементов контура детали и элементов траектории инструмента. Схема траекторий центра инструмента (02) Сводная отчетность

Сводная отчетность Промышленные сети

Промышленные сети Предмет: информатика Класс: 10-11 Тема урока: Оптимизационное моделирование в электронных таблицах Excel 2007 Крячко София Викторовна У

Предмет: информатика Класс: 10-11 Тема урока: Оптимизационное моделирование в электронных таблицах Excel 2007 Крячко София Викторовна У Представление и кодирование информации с помощью знаковых систем

Представление и кодирование информации с помощью знаковых систем InsureTech как драйвер развития страхового рынка

InsureTech как драйвер развития страхового рынка Что стало с читами в видеоиграх

Что стало с читами в видеоиграх Процеси. Process Control Block і контекст процесу

Процеси. Process Control Block і контекст процесу Электронные таблицы в медицинской статистике

Электронные таблицы в медицинской статистике Информационно-технический проект

Информационно-технический проект Условный оператор. Инструкция if. Множественное ветвление

Условный оператор. Инструкция if. Множественное ветвление Всемирная паутина, как информационное хранилище

Всемирная паутина, как информационное хранилище Алгоритмы

Алгоритмы Информационные технологии в учебном процессе

Информационные технологии в учебном процессе Рекламная и декоративная светотехника

Рекламная и декоративная светотехника Типы алгоритмов. Линейный алгоритм

Типы алгоритмов. Линейный алгоритм Random.Randint

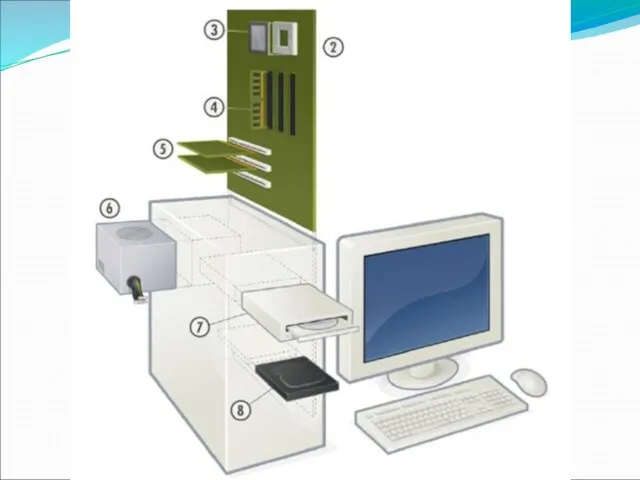

Random.Randint Конструктор. Офисный ПК. Игровой ПК

Конструктор. Офисный ПК. Игровой ПК Умные города на примере Торонто и Нью-Йорка

Умные города на примере Торонто и Нью-Йорка Передача заданий между смежными отделами в электронном виде при проектировании в ПК AVEVA

Передача заданий между смежными отделами в электронном виде при проектировании в ПК AVEVA CSS-селекторы

CSS-селекторы Учебный курс Хранилища данных Лекция 1 Понятия о хранилищах Лекции читает Кандидат технических наук, доцент Перминов Генн

Учебный курс Хранилища данных Лекция 1 Понятия о хранилищах Лекции читает Кандидат технических наук, доцент Перминов Генн Порядок участия в торгах на электронных площадках

Порядок участия в торгах на электронных площадках Стандартизація та сертифікація інформаційних управляючих систем

Стандартизація та сертифікація інформаційних управляючих систем Тема: Решение задач с условным оператором. Цели: Научить решать задачи с условным оператором. Развивать умения составлять п

Тема: Решение задач с условным оператором. Цели: Научить решать задачи с условным оператором. Развивать умения составлять п Введение в UML. UML. (Unified Modeling Language) – унифицированный язык моделирования

Введение в UML. UML. (Unified Modeling Language) – унифицированный язык моделирования Алгоритмическая структура «цикл»

Алгоритмическая структура «цикл» Первый Бит

Первый Бит