Predicting future outputs — machine learning

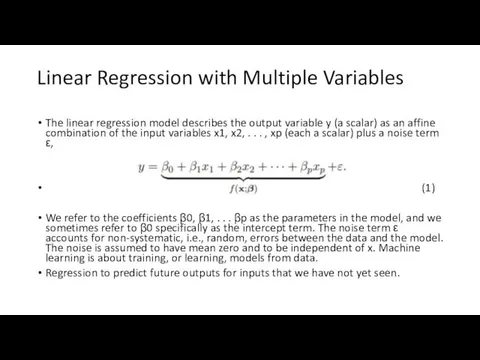

In machine learning, the emphasis is rather on predicting some (not yet seen) output $y_\star$ for some new input $x_\star = [x_{\star 1}\ x_{\star 2}\ \dots\ x_{\star p}]^T$. To make a prediction for a test input $x_\star$, we insert it into the model (1). Since ε (by assumption) has mean value zero, we take the prediction to be the model output with ε set to zero, and denote it by $\hat{y}_\star$.
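The prediction formula itself did not survive on this slide, and model (1) is not reproduced here. As a sketch only, assuming model (1) is the usual linear regression model with intercept $\beta_0$ and coefficients $\beta_1, \dots, \beta_p$ (these symbols are an assumption, not taken from the slide), dropping the zero-mean noise term would give:

$$
y = \beta_0 + \beta_1 x_1 + \dots + \beta_p x_p + \varepsilon
\quad\Longrightarrow\quad
\hat{y}_\star = \beta_0 + \beta_1 x_{\star 1} + \dots + \beta_p x_{\star p}
$$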

We use the hat symbol on $\hat{y}_\star$ to indicate that it is a prediction, our best guess. If we were somehow able to observe the actual output for $x_\star$, we would denote it by $y_\star$ (without a hat).
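To make the prediction step concrete, here is a minimal Python sketch. It assumes model (1) is a linear model; the coefficient values, the test input, and the names `beta_0`, `beta`, and `predict` are hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical coefficients (not from the slide): intercept and p = 3 slopes.
beta_0 = 1.0
beta = np.array([2.0, -0.5, 0.25])


def predict(x_star):
    """Return y-hat for a test input, i.e. the model output with the noise set to zero."""
    x_star = np.asarray(x_star, dtype=float)
    return beta_0 + beta @ x_star


# A new, previously unseen input x* with p = 3 components.
x_star = np.array([1.0, 2.0, 4.0])
y_hat_star = predict(x_star)
print(y_hat_star)  # 1.0 + 2.0*1.0 - 0.5*2.0 + 0.25*4.0 = 3.0
```

The true output $y_\star$ would differ from this value by the unobserved noise, which is why the prediction carries a hat.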

Приёмы устных вычислений вида: 470+80, 560-90

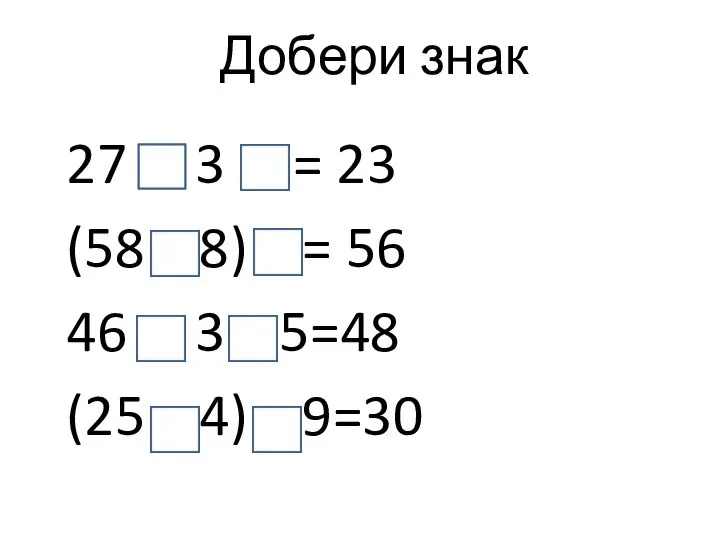

Приёмы устных вычислений вида: 470+80, 560-90 Добери знак

Добери знак Противоположные числа Какие числа называют противоположными? Как на координатной прямой располагаются точки, соответствующие п

Противоположные числа Какие числа называют противоположными? Как на координатной прямой располагаются точки, соответствующие п Элементы теории вероятностей

Элементы теории вероятностей Длина окружности. Коллекция задач для 6 класса

Длина окружности. Коллекция задач для 6 класса Матриці та дії над ними. Поняття і види матриць

Матриці та дії над ними. Поняття і види матриць Первообразная и неопределённый интеграл

Первообразная и неопределённый интеграл Свойства двойного интеграла

Свойства двойного интеграла Дифференциал функции

Дифференциал функции Метрология. Основные понятия

Метрология. Основные понятия Правильные многоугольники

Правильные многоугольники Арифметическая и геометрическая прогрессии Учитель математики МБОУ «Адаевская ООШ» Актанышского муниципального района Респу

Арифметическая и геометрическая прогрессии Учитель математики МБОУ «Адаевская ООШ» Актанышского муниципального района Респу Презентация по математике "Теорема Пифагора" -

Презентация по математике "Теорема Пифагора" -  Многогранники. Все формулы. Геометрия (10-11 класс)

Многогранники. Все формулы. Геометрия (10-11 класс) Математическое моделирование. Значимость коэффициентов регрессии

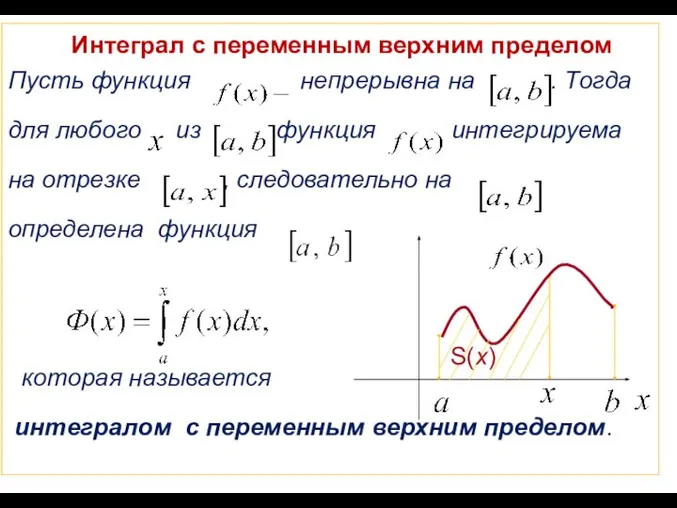

Математическое моделирование. Значимость коэффициентов регрессии Интеграл с переменным верхним пределом

Интеграл с переменным верхним пределом Количественные характеристики случайной величины. Описательная статистика. (Лекция 4)

Количественные характеристики случайной величины. Описательная статистика. (Лекция 4) Математические забавы

Математические забавы Построение информационной модели метода изготовления изделия

Построение информационной модели метода изготовления изделия Применение подобия к доказательству теорем и решению задач. Урок 38

Применение подобия к доказательству теорем и решению задач. Урок 38 Площа бічної та повної поверхонь конуса

Площа бічної та повної поверхонь конуса Математика вокруг нас. Внеклассное мероприятие

Математика вокруг нас. Внеклассное мероприятие Презентация по математике "Упрощение выражений 6 класс" - скачать

Презентация по математике "Упрощение выражений 6 класс" - скачать  Практикум по решению задачи №20 (базовый уровень). ЕГЭ

Практикум по решению задачи №20 (базовый уровень). ЕГЭ Размещение из N элементов по k (k ≤ n)

Размещение из N элементов по k (k ≤ n) Число 14. Многоугольники

Число 14. Многоугольники Иррациональные неравенства. Виды и способы решения

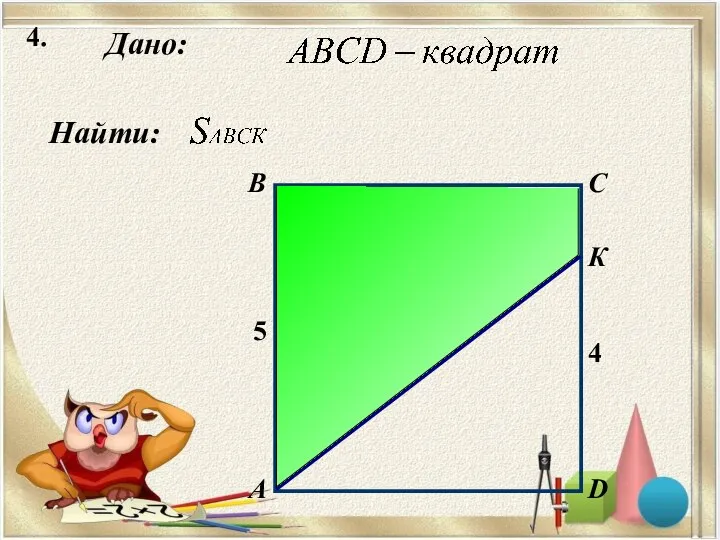

Иррациональные неравенства. Виды и способы решения Решение задач на площадь треугольника

Решение задач на площадь треугольника